Crafting Responsible AI: What Happens When Legal, Product & Values Align

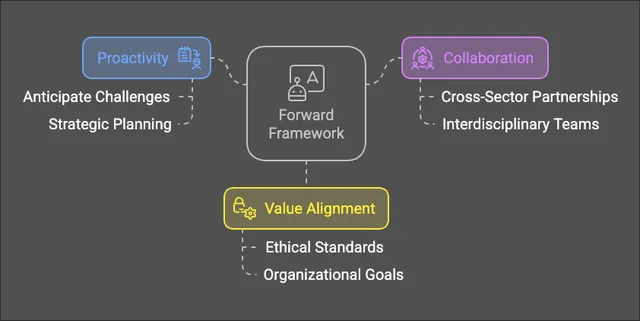

AI is already here, not just a future prospect. It brings a tangled mix of innovation, risks, and responsibilities that no single team can handle alone. In 2024, I facilitated a series of cross-team discussions focused on creating practical and responsible AI policies. The key insight? We need a new strategy built on three core principles: Proactivity, Collaboration, and Value Alignment. 🚀

Introducing the Forward Framework for AI Policy—a strategic tool to manage legal, operational, and cultural challenges while fostering innovation.

Here’s a brief overview of our discussion points:

🔍 Start with Culture

Your policy is only as strong as your understanding of the organization's risk tolerance. Enthusiasm and skepticism toward AI are both warranted, and a good policy accounts for each.

🛠 Leverage What You Have

Avoid redundant effort by building on existing compliance and governance frameworks. Use sandboxes to create safe learning environments, with strict controls on sensitive data.

📚 Clarify What “AI” Means

Not all AI systems are alike. Whether a system is predictive, generative, or something else, the specifics matter, and so do the practices and assurances your vendors provide.

🔁 Design for Iteration

Policy is not a one-time rollout. Build feedback mechanisms, communicate expectations clearly, and meet teams where they are. Engagement builds trust.

🚨 Escalate Wisely, Balance Bravely

Establish clear protocols for escalating issues, but do not let risk aversion stall progress. Taking strategic risks remains essential to moving the business forward.

Bottom line? The most effective AI strategies are grounded in empathy, clarity, and structure. They evolve alongside the tech—and the people using it.

👉 Watch the full discussion series here:

Part 1:

Part 2:

Part 3: