When autonomous AI creates liability you can't explain

In November, the World Economic Forum (WEF) and Capgemini released a framework on deploying AI agents that act independently without creating liability exposure you can't defend.

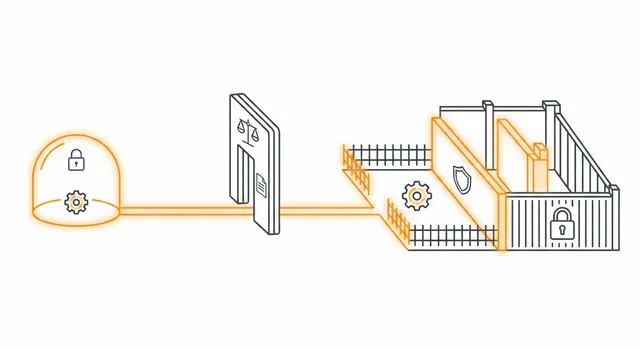

When autonomous agents execute transactions without human approval, your organization owns the outcome directly. The framework provides three deployment gates.

First is containment during pilots. Test new agent capabilities in environments where failure is recoverable. Understand how the system actually behaves before expanding its authority.

Second is evidence-based iteration. Moving an agent from pilot to production depends on documented performance against pre-defined metrics.

Third is proportionate safeguards. Controls scale with risk, so an agent handling public data operates under lighter constraints than one managing customer financial information.
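
To make "proportionate" concrete, here is a minimal Python sketch. It assumes a hypothetical three-level data-sensitivity scale and made-up safeguard names; none of these come from the framework itself. Each tier inherits the controls of the tiers below it, and the check reports which required safeguards are still missing before an agent gets access.

```python
from enum import IntEnum


class DataSensitivity(IntEnum):
    # Hypothetical scale: a higher value means more sensitive data.
    PUBLIC = 1
    INTERNAL = 2
    CUSTOMER_FINANCIAL = 3


# Hypothetical controls introduced at each tier.
TIER_SAFEGUARDS = {
    DataSensitivity.PUBLIC: {"action_logging"},
    DataSensitivity.INTERNAL: {"access_scoping"},
    DataSensitivity.CUSTOMER_FINANCIAL: {"transaction_limits", "human_review_above_limit"},
}


def required_safeguards(sensitivity: DataSensitivity) -> set[str]:
    """Cumulative safeguards: a tier inherits every control from the tiers below it."""
    required: set[str] = set()
    for tier in DataSensitivity:
        if tier <= sensitivity:
            required |= TIER_SAFEGUARDS[tier]
    return required


def missing_safeguards(sensitivity: DataSensitivity, implemented: set[str]) -> set[str]:
    """Safeguards the tier requires that have not been implemented yet."""
    return required_safeguards(sensitivity) - implemented
```

For example, an agent moving onto customer financial data with only logging and access scoping in place would report `transaction_limits` and `human_review_above_limit` as missing.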

For product managers, this means that every time you grant an agent new authority (access to different data sources, the ability to execute transactions, integration with external systems), you need a defensible record connecting the capability, the risk analysis, and the safeguards implemented.
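
One way to keep that record is an append-only log that refuses incomplete entries. The sketch below is an illustration under stated assumptions. The `AuthorityGrant` fields, the `GrantLog` class, and any example values are hypothetical, not a schema published by the WEF or Capgemini.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AuthorityGrant:
    """One expansion of an agent's authority (hypothetical schema for illustration)."""
    agent_id: str
    capability: str                # the new authority, e.g. "execute refunds"
    risk_analysis_ref: str         # pointer to the documented risk analysis
    safeguards: tuple[str, ...]    # controls implemented for this capability
    approved_by: str
    approved_on: date

    def is_complete(self) -> bool:
        # Complete only if capability, risk analysis, safeguards, and approver are all recorded.
        return all([self.capability, self.risk_analysis_ref, self.safeguards, self.approved_by])


class GrantLog:
    """Append-only history of authority grants; rejects incomplete entries."""

    def __init__(self) -> None:
        self._entries: list[AuthorityGrant] = []

    def record(self, grant: AuthorityGrant) -> None:
        if not grant.is_complete():
            raise ValueError(f"incomplete grant for {grant.agent_id}: {grant.capability!r}")
        self._entries.append(grant)

    def history(self, agent_id: str) -> list[AuthorityGrant]:
        # Every grant for one agent, in the order it was recorded.
        return [g for g in self._entries if g.agent_id == agent_id]
```

Recording a grant with an empty `risk_analysis_ref` or no safeguards raises `ValueError`. A gap in the chain therefore surfaces when the authority is granted, not later when it has to be defended.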

For counsel, the framework provides three control mechanisms: a mandatory sandboxing policy with minimum duration requirements, go/no-go decision gates that require legal approval based on performance data, and risk-based policy tiers rather than categorical restrictions.
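
A go/no-go gate can be expressed as a check that returns both a decision and the reasons behind it, so the reasons become part of the record. This is a hypothetical sketch. The metric names, the assumption that every metric is "higher is better", and the `min_sandbox_days` parameter (which you would set per risk tier) are illustrative choices, not values taken from the framework.

```python
from dataclasses import dataclass


@dataclass
class PilotEvidence:
    sandbox_days: int              # how long the agent ran in the sandbox
    metrics: dict[str, float]      # observed pilot results, keyed by metric name
    legal_approved: bool           # sign-off from counsel on this evidence


def go_no_go(evidence: PilotEvidence, thresholds: dict[str, float],
             min_sandbox_days: int) -> tuple[bool, list[str]]:
    """Return (go, reasons); 'go' is True only when no blocking reason was found."""
    reasons: list[str] = []
    if evidence.sandbox_days < min_sandbox_days:
        reasons.append(f"sandbox ran {evidence.sandbox_days} days; {min_sandbox_days} required")
    for name, minimum in thresholds.items():
        observed = evidence.metrics.get(name)
        if observed is None:
            reasons.append(f"no evidence recorded for {name}")
        elif observed < minimum:  # assumes higher is better for every metric
            reasons.append(f"{name} = {observed} is below the pre-defined {minimum}")
    if not evidence.legal_approved:
        reasons.append("legal approval missing")
    return (not reasons, reasons)
```

A "no-go" comes back with its reasons, so the same output can be filed as the documented basis for the decision.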

Why this matters: when an agentic system causes harm (unauthorized transactions, data exposure, discriminatory outcomes), you need to demonstrate that you deployed it deliberately.

"Deliberately" means you documented the risks, implemented proportionate controls, and gathered evidence at each expansion stage that justified increasing autonomy.

Agent failures create different liability patterns than recommendation-system failures. Because an autonomous agent acts without a human approving each action, the organization that deployed it owns the outcome more directly. The framework's staged approach creates decision points where you explicitly chose to expand agent authority based on documented evidence, and that is the record you need when defending the choice.

https://www.weforum.org/publications/ai-agents-in-action-foundations-for-evaluation-and-governance/