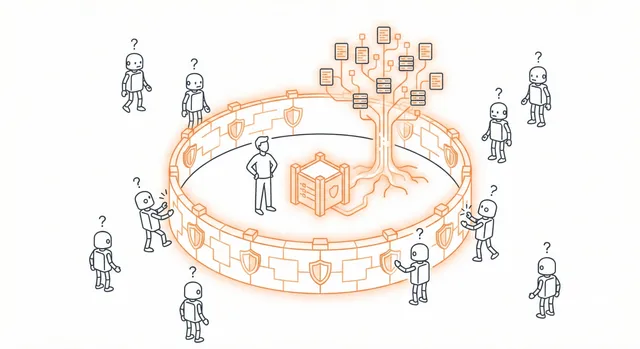

Your company just licensed the same AI tools as your competitors. Same models. Same infrastructure. Same vendor capabilities.

So what makes your legal team's work defensible?

A recent investment analysis video examining AI's "Fifth Wave" offers an answer: when everyone has access to the same AI, the only defensible advantage is proprietary data that competitors can't replicate.

The video presents three examples:

A debt collection company's moat isn't the AI. It's real-time knowledge of how Missouri statutes differ from California statutes, learned from millions of compliant calls. Competitors can license the same AI models, but they can't access that regulatory compliance data.

A plaintiff attorney platform's moat is case outcome data showing which arguments win and for how much. Public models know the law. This system knows which strategies work in practice. That's private data competitors can't access.

A legal research company aggregated 26 years of Spanish legal records. Revenue quintupled after adding AI. The moat isn't the AI—it's the data that foundation models can't access.

The pattern: these companies win because they have proprietary knowledge in domains where regulatory complexity creates barriers.

For corporate legal teams, the equivalent question is stark: do you have institutional legal knowledge that makes AI more effective for your business than a competitor could make it with the same vendor tools?

The Institutional Knowledge Problem

Every in-house legal team has proprietary knowledge:

- Which contract terms work for your specific business model

- How your leadership team actually makes risk decisions

- Which regulatory requirements matter for your specific products

- What legal positions you've taken on gray-area issues

- How cross-functional workflows actually operate

The question isn't whether you have this knowledge. You do. The question is whether it lives in your systems or just in people's heads.

Three Types of Knowledge That Create Moats

1. Decision History With Context

Every time your legal team makes a decision—accepting a contract term, approving a product feature, defining a compliance standard—that's proprietary data about how legal risk operates at your company.

Most companies lose this knowledge. The decision gets made, the work moves forward, and the reasoning disappears into email threads and Slack conversations.

Companies building defensible legal capabilities capture decisions with enough context that AI can learn from them:

- Not just "we approved this contract"

- But why we approved it, what alternatives we considered, what risks we accepted, what protections we negotiated

When your AI suggests a contract clause, does it know which similar clauses your team has accepted, which you've rejected, and why? That's the moat.
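For teams wondering what "capturing decisions with context" could look like in practice, here is a minimal sketch in Python. It assumes decisions are stored as structured records instead of living in email threads. The `DecisionRecord` fields, the `precedents_for` helper, and the sample records are all hypothetical illustrations, not any vendor's schema or real data.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DecisionRecord:
    """One legal decision captured with its context (hypothetical schema)."""
    decided_on: date
    product_line: str
    clause_type: str          # e.g. "limitation_of_liability"
    outcome: str              # "accepted" or "rejected"
    rationale: str            # why we decided this way
    alternatives: list[str] = field(default_factory=list)    # options we considered
    risks_accepted: list[str] = field(default_factory=list)  # risks we knowingly took on
    protections: list[str] = field(default_factory=list)     # what we negotiated in return


def precedents_for(records, clause_type, product_line):
    """Return past decisions on the same clause type and product line, newest first."""
    matches = [
        r for r in records
        if r.clause_type == clause_type and r.product_line == product_line
    ]
    return sorted(matches, key=lambda r: r.decided_on, reverse=True)


# Hypothetical sample history for illustration only.
history = [
    DecisionRecord(
        date(2023, 3, 1), "payments", "limitation_of_liability", "rejected",
        "Uncapped liability was outside our stated risk tolerance.",
        alternatives=["cap at 12 months of fees"],
    ),
    DecisionRecord(
        date(2024, 6, 15), "payments", "limitation_of_liability", "accepted",
        "Cap at 2x annual fees matched our tolerance for this customer tier.",
        risks_accepted=["exposure above the cap for data incidents"],
        protections=["carve-out for gross negligence"],
    ),
]

for record in precedents_for(history, "limitation_of_liability", "payments"):
    print(record.decided_on, record.outcome, "|", record.rationale)
```

The design choice worth noting is that `rationale`, `alternatives`, `risks_accepted`, and `protections` sit next to the outcome, so an AI tool retrieving precedents gets the "why" along with the "what."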

2. Product-Specific Risk Frameworks

Generic AI knows contract law and regulatory requirements. It doesn't know your company's specific risk tolerance, product constraints, or go-to-market strategy.

Your legal team does. The question is whether that knowledge is documented in ways that make AI more effective.

Which contract terms actually work for your specific business model? Which regulatory requirements apply to your specific products? Which legal risks does your leadership accept versus avoid?

If that knowledge exists only in individual lawyers' experience, it disappears when people leave. If it's captured systematically, it becomes infrastructure that makes AI far more valuable.

3. Cross-Functional Workflow Intelligence

AI can review contracts. But knowing which contracts need engineering review, which need security approval, and which need executive sign-off: that's institutional knowledge.

AI can identify regulatory requirements. But knowing how those requirements interact with your product roadmap, your engineering architecture, your sales process—that's expertise.

Most companies handle this through institutional memory and personal relationships. That works until people leave or teams scale.

Companies treating this as defensible infrastructure document not just what decisions get made, but how decisions flow through the organization. The routing logic. The stakeholder map. The escalation criteria.

That workflow intelligence—captured and made AI-accessible—is what competitors can't replicate by just licensing the same vendor tools.
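Routing logic and escalation criteria like these can be written down as explicit rules rather than left to memory. Below is a minimal Python sketch, assuming hypothetical contract attributes (`handles_customer_data`, `requires_code_changes`, `annual_value`) and an illustrative dollar threshold. Real criteria would come from your own decision history, not from this example.

```python
from dataclasses import dataclass


@dataclass
class Contract:
    """Hypothetical contract attributes that drive review routing."""
    name: str
    handles_customer_data: bool
    requires_code_changes: bool
    annual_value: int  # in USD


# Illustrative escalation threshold, not a recommendation.
EXECUTIVE_THRESHOLD_USD = 1_000_000


def required_reviews(contract: Contract) -> list[str]:
    """Return the reviews a contract needs, in a fixed illustrative order."""
    reviews = ["legal"]  # every contract starts with legal review in this sketch
    if contract.requires_code_changes:
        reviews.append("engineering")
    if contract.handles_customer_data:
        reviews.append("security")
    if contract.annual_value >= EXECUTIVE_THRESHOLD_USD:
        reviews.append("executive")
    return reviews


deal = Contract(
    "Acme data platform MSA",  # hypothetical deal
    handles_customer_data=True,
    requires_code_changes=False,
    annual_value=2_500_000,
)
print(required_reviews(deal))  # ['legal', 'security', 'executive']
```

Once the rules live in a form like this, they can be reviewed, versioned, and handed to an AI assistant as context, instead of depending on who happens to remember them.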

The Moat Question Every Legal Leader Should Ask

The video warns: "Your margin is my opportunity. If you just wrap a model, someone can 'vibe code' a competitor in a weekend."

For in-house legal teams:

If your AI strategy is "we bought Harvey," that's not a moat. Another company can buy Harvey next quarter and achieve the same results you do.

If your AI strategy is "we've captured 10 years of legal decisions with context, organized by product line and regulatory regime, with validated precedent and workflow routing logic that reflects how decisions actually get made here"—now you've built something defensible.

What This Means Monday Morning

Every legal decision your team makes this week will happen whether or not you capture it systematically.

The question is: six months from now, when AI needs to help your team handle a similar situation, will that institutional knowledge exist in a form AI can use?

If your answer is "it'll be in someone's head," you're not building a moat. You're building dependency on individuals who will eventually leave.

If your answer is "it'll be captured with context in systems that make our AI smarter about our specific business," you're building defensible capability that compounds over time.

The legal teams that dominate the next decade won't be the ones with the best vendor tools. They'll be the ones who understand that when everyone has access to the same AI models, the moat is institutional knowledge competitors can't access.

Not because you won't share it. Because they can't replicate the years of decisions, the product-specific frameworks, the workflow intelligence that makes AI actually useful for your specific business.

That's the only moat that matters.

This is strategic analysis, not legal advice for your specific situation. AI governance requirements vary by organization and industry.

For the full story, and to learn more about AI, Innovation and the law, click on the website in my bio.

#ProductCounsel #InHouseLegal #AIStrategy #LegalOperations #InstitutionalKnowledge

https://youtu.be/3XVDtPU8xKE?si=lSO0hiZ4k4G0bHyk