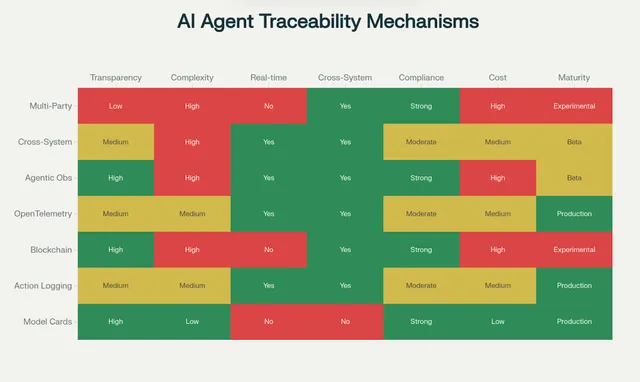

TL;DR: The rapid deployment of agentic AI systems across organizations has created an urgent need for comprehensive traceability and auditability frameworks. Current state-of-the-art solutions range from traditional model documentation methods to advanced distributed observability platforms, with emerging experimental techniques that include blockchain immutability and secure multi-party computation.

Key findings show that while technical mechanisms are in place, their implementation remains scattered across the industry. Enterprise-ready solutions with strong traceability are available from major cloud providers and specialized vendors, but significant gaps still exist in cross-system correlation and standardized compliance frameworks.

The most mature solutions concentrate on operational observability and development-time tracing, while experimental methods target advanced use cases like federated auditability and differential privacy in logs. Financial services and healthcare sectors lead in regulatory-driven adoption, with government and legal services emerging as high-priority industries.

Comparison of Technical Mechanisms for AI Agent Traceability and Auditability

Technical Mechanisms for Traceability & Auditability

Provenance Tracking: Model Cards and Data Cards

Model cards represent the foundational approach to AI transparency, providing standardized documentation frameworks for machine learning models. NVIDIA's enhanced Model Card++ initiative extends traditional model cards with dataset provenance and traceability, including dataset storage and quality validation. These cards serve as "open-source fact sheets" that capture essential metadata, including training methodology, performance measures, and ethical considerations.[1][2][3]

Google's Model Cards Toolkit enables developers to implement baseline documentation standards, while IBM's AI Factsheets provide comprehensive model lifecycle management capabilities. The approach offers strong regulatory compliance alignment but lacks real-time capabilities and cross-system integration.[4][3]

Data cards complement model documentation by providing "structured summaries of essential facts about ML datasets needed by stakeholders across a project's lifecycle". This documentation-centric approach establishes transparency foundations but requires integration with operational systems for comprehensive auditability.[5]
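Model cards and data cards are structured metadata at heart, so their provenance claims can be checked by code. The minimal sketch below uses a hypothetical schema: `ModelCard`, `DataCard`, and their fields are illustrative, not the Model Card++, Model Cards Toolkit, or AI FactSheets formats. The data card pins each dataset by its SHA-256 hash, and `verify_dataset` checks that a given set of bytes matches the card.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DataCard:
    # Hypothetical minimal data card summarizing one training dataset.
    name: str
    source_uri: str
    sha256: str  # content hash pins the exact dataset version
    collection_method: str
    known_limitations: list[str] = field(default_factory=list)


@dataclass
class ModelCard:
    # Hypothetical minimal model card that links a model to its data cards.
    model_name: str
    version: str
    training_method: str
    metrics: dict[str, float]
    ethical_considerations: list[str]
    datasets: list[DataCard]

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses, so data cards are included.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def fingerprint(data: bytes) -> str:
    """Hash dataset bytes so a card can later be checked against the data."""
    return hashlib.sha256(data).hexdigest()


def verify_dataset(card: DataCard, data: bytes) -> bool:
    """Return True if the bytes match the hash recorded in the data card."""
    return fingerprint(data) == card.sha256


raw = b"id,label\n1,approve\n2,deny\n"
dataset_card = DataCard(
    name="loan-decisions-sample",
    source_uri="s3://example-bucket/loans.csv",  # placeholder URI
    sha256=fingerprint(raw),
    collection_method="internal export (illustrative)",
    known_limitations=["small sample", "single region"],
)
card = ModelCard(
    model_name="credit-triage",
    version="1.2.0",
    training_method="gradient-boosted trees",
    metrics={"auc": 0.91},  # illustrative value, not a real result
    ethical_considerations=["review for disparate impact before deployment"],
    datasets=[dataset_card],
)
print(card.to_json())
print(verify_dataset(dataset_card, raw))           # True
print(verify_dataset(dataset_card, raw + b"3,x"))  # False
```

Pinning datasets by content hash is one simple way to tie a documentation-centric card to operational systems: a pipeline can refuse to train or deploy when the check fails.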

Action Logging & Replay Systems

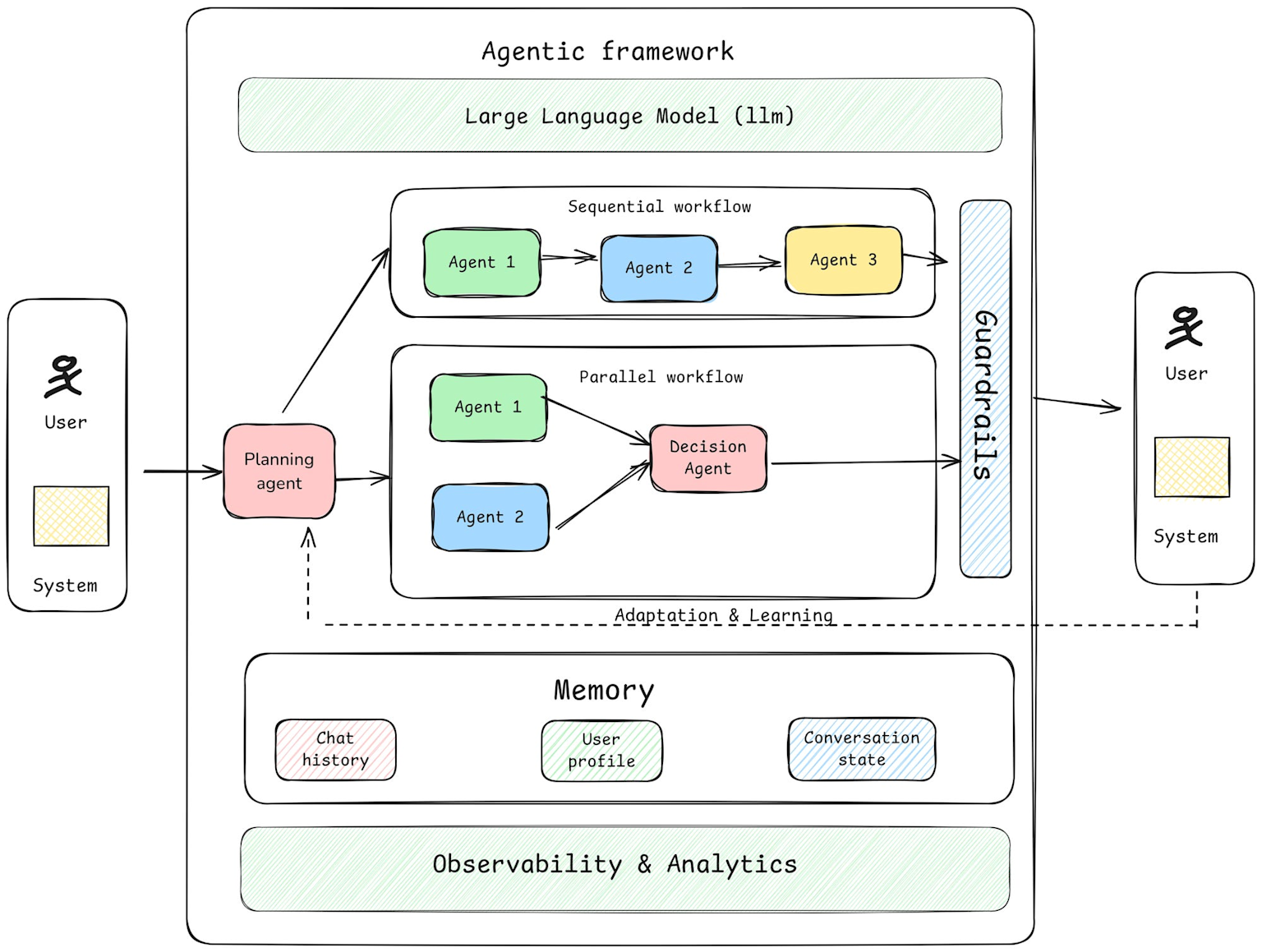

Modern agentic AI systems implement logging that captures every decision, plan, API or tool call, state transition, and environmental interaction. This gives agents "flight data recorder" functionality: a complete audit trail recorded from the agent's own perspective.[6][7][8]
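A minimal sketch of such a recorder writes each agent step as one JSON object per line (JSON Lines) with a timestamp, run ID, agent ID, step number, step type, and payload. The `ActionLogger` class and its record schema are hypothetical, chosen only to illustrate the idea.

```python
import io
import json
import time
import uuid
from typing import Any, TextIO


class ActionLogger:
    """Hypothetical append-only JSON Lines recorder for agent steps."""

    def __init__(self, sink: TextIO, agent_id: str) -> None:
        self.sink = sink
        self.agent_id = agent_id
        self.run_id = str(uuid.uuid4())  # groups all steps of one agent run
        self.step = 0

    def record(self, kind: str, **payload: Any) -> dict:
        # kind is e.g. "plan", "tool_call", "tool_result", "state", "decision"
        self.step += 1
        entry = {
            "ts": time.time(),
            "run_id": self.run_id,
            "agent_id": self.agent_id,
            "step": self.step,
            "kind": kind,
            "payload": payload,
        }
        # One JSON object per line keeps the log easy to append to and stream.
        self.sink.write(json.dumps(entry, sort_keys=True) + "\n")
        self.sink.flush()
        return entry


buf = io.StringIO()  # stand-in for a file or log shipper
log = ActionLogger(buf, agent_id="billing-agent")
log.record("plan", steps=["look up invoice", "issue refund"])
log.record("tool_call", tool="get_invoice", args={"invoice_id": "INV-7"})
log.record("tool_result", tool="get_invoice", result={"amount": 120.0})
log.record("decision", action="refund", amount=120.0, reason="duplicate charge")

lines = buf.getvalue().splitlines()
print(len(lines), json.loads(lines[1])["kind"])  # 4 tool_call
```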

Replay systems record every action an agent takes so that past decisions can be analyzed later, much like replays in video games or log inspection tools. This approach supports root cause analysis and behavioral debugging, and some implementations offer "time-travel debugging" for complex multi-agent workflows.[9][10]
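Replay can be sketched as re-applying recorded state updates to an empty state. Stopping at step *k* reconstructs what the agent "knew" at that point, which is the core idea behind time-travel debugging. The event format below (a dictionary of key/value updates per step) is an assumption for illustration, not any framework's checkpoint format.

```python
from copy import deepcopy
from typing import Any, Optional

# Hypothetical recorded trace: each event applies key/value updates to agent state.
Event = dict[str, Any]

trace: list[Event] = [
    {"step": 1, "action": "read_ticket", "updates": {"ticket": "T-42", "status": "open"}},
    {"step": 2, "action": "classify", "updates": {"category": "billing"}},
    {"step": 3, "action": "call_refund_api", "updates": {"refund_id": "R-9"}},
    {"step": 4, "action": "close_ticket", "updates": {"status": "closed"}},
]


def replay(events: list[Event], until_step: Optional[int] = None) -> dict[str, Any]:
    """Rebuild agent state by re-applying recorded updates in step order.

    No tools are re-executed: replay only reads the log, so it is safe
    to run against production traces.
    """
    state: dict[str, Any] = {}
    for event in sorted(events, key=lambda e: e["step"]):
        if until_step is not None and event["step"] > until_step:
            break
        state.update(deepcopy(event["updates"]))
    return state


def diff_steps(events: list[Event], a: int, b: int) -> dict[str, tuple]:
    """Show which keys changed between step a and step b, as (before, after)."""
    before, after = replay(events, a), replay(events, b)
    keys = before.keys() | after.keys()
    return {k: (before.get(k), after.get(k)) for k in keys if before.get(k) != after.get(k)}


print(replay(trace, until_step=2))  # {'ticket': 'T-42', 'status': 'open', 'category': 'billing'}
print(diff_steps(trace, 2, 4))
```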

Leading implementations use structured logging with the Model Context Protocol (MCP) to build unified audit trails directly from the agent's perspective. These systems provide searchable logs of tool calls over time, filterable by agent, time period, or specific action.[8][6]
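Searching such a trail by agent, time window, or action comes down to filtering structured records. The sketch below assumes records with a timestamp, agent ID, action (for example an MCP tool name), and target. It is a hypothetical illustration, not the query API of any MCP server or vendor product.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class AuditRecord:
    ts: datetime
    agent_id: str
    action: str  # e.g. the MCP tool name that was invoked
    target: str


def search(
    records: Iterable[AuditRecord],
    agent_id: Optional[str] = None,
    action: Optional[str] = None,
    start: Optional[datetime] = None,
    end: Optional[datetime] = None,
) -> Iterator[AuditRecord]:
    """Yield records matching every filter that is set (None means 'any')."""
    for r in records:
        if agent_id is not None and r.agent_id != agent_id:
            continue
        if action is not None and r.action != action:
            continue
        if start is not None and r.ts < start:
            continue
        if end is not None and r.ts >= end:  # half-open window [start, end)
            continue
        yield r


def utc(h: int, m: int) -> datetime:
    return datetime(2025, 1, 15, h, m, tzinfo=timezone.utc)


log = [
    AuditRecord(utc(9, 0), "support-agent", "read_file", "faq.md"),
    AuditRecord(utc(9, 5), "support-agent", "send_email", "customer@example.com"),
    AuditRecord(utc(10, 30), "ops-agent", "read_file", "deploy.yaml"),
    AuditRecord(utc(11, 0), "support-agent", "read_file", "refunds.md"),
]

hits = list(search(log, agent_id="support-agent", action="read_file",
                   start=utc(9, 0), end=utc(11, 0)))
print([r.target for r in hits])  # ['faq.md']
```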

Immutable Records: Blockchain and Distributed Ledgers

Blockchain-based audit trails provide tamper-proof, cryptographically signed logs for all agent activities. The Decentralized Chronicle approach creates "comprehensive, unalterable records of every AI agent's interactions and decisions", offering several key advantages, including verification, auditability, evolution tracking, and dispute resolution capabilities.[11][7]

Implementations distribute data across multiple nodes, which reduces the risk of a centralized breach and removes any single point of failure. However, blockchain approaches face significant scalability challenges and implementation complexity, so adoption is limited to experimental deployments that require maximum audit integrity.[11]

Current implementations focus on chronological, secure recording of the interactions, decisions, and communications of AI agents, but enterprise adoption remains limited by performance and cost.[11]
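The tamper-evidence property these ledgers depend on can be shown without a blockchain. If each log entry stores the hash of the entry before it, editing any past entry breaks every later link. The sketch below is a single-writer hash chain built on SHA-256 from the Python standard library. It leaves out the consensus, replication, and digital signatures a distributed ledger such as the Decentralized Chronicle would add, so it demonstrates tamper evidence, not tamper resistance.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict[str, Any]) -> str:
    # Canonical JSON (sorted keys, compact separators) keeps the hash stable.
    body = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(chain: list[dict[str, Any]], record: dict[str, Any]) -> None:
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(chain: list[dict[str, Any]]) -> int:
    """Return the index of the first invalid entry, or -1 if the chain is intact."""
    prev = GENESIS
    for i, entry in enumerate(chain):
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return i
        prev = entry["hash"]
    return -1


chain: list[dict[str, Any]] = []
append(chain, {"agent": "trader-1", "action": "quote", "symbol": "ABC"})
append(chain, {"agent": "trader-1", "action": "order", "qty": 100})
append(chain, {"agent": "trader-1", "action": "confirm", "order_id": 7})
print(verify(chain))  # -1

chain[1]["record"]["qty"] = 1000  # tamper with a past action
print(verify(chain))  # 1
```

Anyone who can rewrite the entire log could recompute every hash. This is why real systems also sign entries or publish the latest hash to independent parties, which is the role a distributed ledger plays.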

Observability Tools: OpenTelemetry and Distributed Tracing

OpenTelemetry has emerged as the leading standard for AI system observability, providing standardized telemetry across prompts, tool calls, and inferences. It maps directly onto AI pipelines: each such operation becomes a span, and parent-child relationships between spans link a user request to the prompts and model calls it triggers.[12][13]

AI-specific attributes captured include model parameters (temperature, max_tokens, version), token usage and cost (input tokens, output tokens, total cost), business context (domain, use case, expected topics), and AI-specific metrics (confidence scores, safety results). This level of detail turns debugging from guesswork into a matter of traceable evidence.[12]
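To make the span model concrete, the sketch below builds a small trace in plain Python. A root span for the user request has child spans for a model call and a tool call, and each child carries attributes named after the OpenTelemetry GenAI semantic conventions (such as `gen_ai.request.model` and `gen_ai.usage.input_tokens`). The sketch avoids the OpenTelemetry SDK so it runs on the standard library alone; in the real SDK, this nesting comes from `tracer.start_as_current_span(...)`. `MiniTracer`, the `app.*` attributes, and the per-token prices are hypothetical. Because the GenAI conventions are still evolving, the attribute names should be checked against the current specification.

```python
import secrets
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator, Optional


@dataclass
class Span:
    name: str
    trace_id: str              # shared by every span in one request
    span_id: str
    parent_id: Optional[str]   # None marks the root span
    attributes: dict[str, Any] = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0


class MiniTracer:
    """Stdlib-only imitation of OpenTelemetry-style span nesting (not the OTel API)."""

    def __init__(self) -> None:
        self.finished: list[Span] = []
        self._stack: list[Span] = []  # the innermost open span is the "current" one

    @contextmanager
    def span(self, name: str, attributes: Optional[dict[str, Any]] = None) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        s = Span(
            name=name,
            trace_id=parent.trace_id if parent else secrets.token_hex(16),
            span_id=secrets.token_hex(8),
            parent_id=parent.span_id if parent else None,
            attributes=dict(attributes or {}),
            start=time.time(),
        )
        self._stack.append(s)
        try:
            yield s
        finally:
            s.end = time.time()
            self._stack.pop()
            self.finished.append(s)


# Hypothetical prices in USD per 1,000 tokens, used only for the cost attribute.
PRICE_IN, PRICE_OUT = 0.003, 0.015

tracer = MiniTracer()
with tracer.span("user_request", {"app.use_case": "refund_support"}):
    with tracer.span("chat", {
        "gen_ai.operation.name": "chat",
        "gen_ai.request.model": "example-model",  # placeholder model name
        "gen_ai.request.temperature": 0.2,
        "gen_ai.request.max_tokens": 512,
    }) as llm:
        input_tokens, output_tokens = 1200, 150  # would come from the model response
        llm.attributes["gen_ai.usage.input_tokens"] = input_tokens
        llm.attributes["gen_ai.usage.output_tokens"] = output_tokens
        llm.attributes["app.cost_usd"] = round(
            input_tokens / 1000 * PRICE_IN + output_tokens / 1000 * PRICE_OUT, 6)
    with tracer.span("execute_tool lookup_order", {
        "gen_ai.operation.name": "execute_tool",
        "gen_ai.tool.name": "lookup_order",
    }):
        pass  # the tool call itself would run here

for s in tracer.finished:
    print(f"{s.name:28} parent={s.parent_id} {s.attributes}")
```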

Major implementations include AWS Bedrock Agents monitoring, Azure AI Foundry tracing, and Coralogix's AI Center, which features automatic instrumentation and unified observability across infrastructure, applications, and AI. These platforms offer end-to-end observability, integrating GenAI semantic conventions with traditional logs, traces, and instrumentation.[14][15][12]

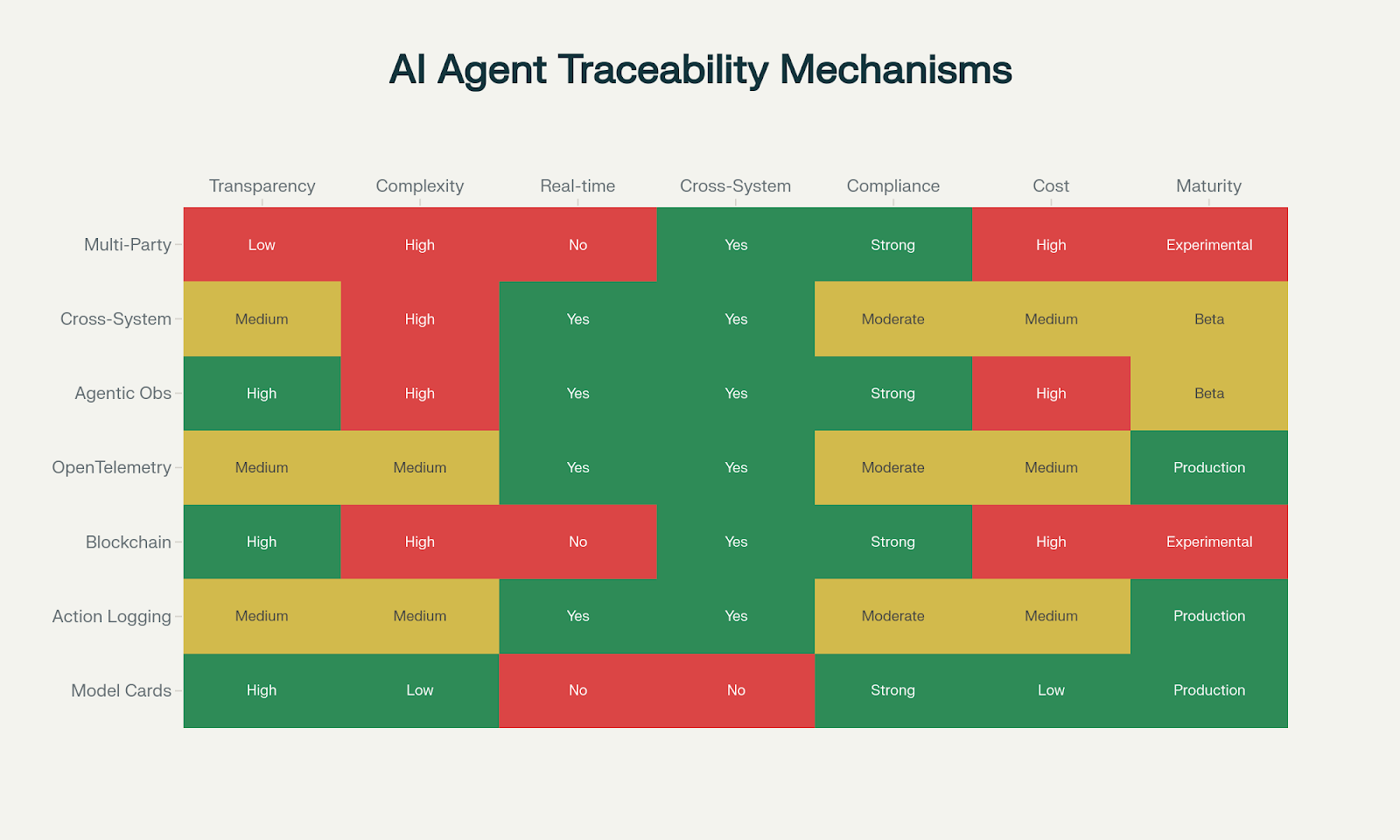

Diagram of an agentic AI framework showcasing multi-agent workflows, planning, memory modules, observability, and guardrails for compliance.

Agentic Observability Platforms

Specialized agentic observability platforms address the unique challenges of distributed, non-deterministic AI systems. Fiddler's Agentic Observability approach "merges traditional APM infrastructure tracking with model and LLM performance monitoring", providing hierarchical visibility from high-level application views through individual sessions and agents to detailed traces and spans.[16]

These platforms capture extensive data across multiple layers, including applications, sessions, individual agents, and their actions or tool calls. Key capabilities include behavioral auditing for monitoring goal progress and plan divergence, intent detection for analyzing plans before execution, and real-time anomaly detection for actions deviating from approved norms.[7][16]
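Behavioral auditing of this kind can be approximated by comparing what an agent actually did with the plan it declared, and that a reviewer or policy approved, before execution. The sketch below flags unplanned actions, skipped steps, and out-of-order execution. The plan format and the three finding types are assumptions for illustration, not the detection logic used by Fiddler or any other vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    kind: str    # "unplanned", "skipped", or "out_of_order"
    action: str


def audit_divergence(plan: list[str], executed: list[str]) -> list[Finding]:
    """Compare an approved plan with the executed action sequence."""
    findings: list[Finding] = []
    planned = set(plan)

    # Actions the agent took that never appeared in the approved plan.
    for action in executed:
        if action not in planned:
            findings.append(Finding("unplanned", action))

    # Planned steps that never ran.
    ran = set(executed)
    for action in plan:
        if action not in ran:
            findings.append(Finding("skipped", action))

    # Planned steps that ran, but earlier than a step that precedes them in the plan.
    order = {a: i for i, a in enumerate(plan)}
    last = -1
    for action in executed:
        if action in order:
            if order[action] < last:
                findings.append(Finding("out_of_order", action))
            last = max(last, order[action])
    return findings


approved = ["fetch_account", "check_balance", "transfer_funds", "notify_user"]
observed = ["fetch_account", "transfer_funds", "check_balance", "export_data"]
for f in audit_divergence(approved, observed):
    print(f.kind, f.action)
# unplanned export_data
# skipped notify_user
# out_of_order check_balance
```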

Arize AX provides comprehensive tracing and evaluation capabilities for agentic workflows, helping validate the correctness and trustworthiness of multi-agent systems. These platforms address the inherent unpredictability of agentic systems by providing tools to track non-deterministic paths and hidden failure modes.[17]

Architecture workflow diagram of an AI agent showing interactions between LLM, tools, memory, and external environment for executing and updating actions.

System-Level Architectures

Cross-System Tracing Solutions

Cross-system correlation represents one of the most challenging aspects of agentic AI observability. Modern distributed systems require tracing across heterogeneous platforms, including cloud environments, SaaS applications, and enterprise technology stacks. The THAPI (Tracing Heterogeneous APIs) framework demonstrates programming model-centric tracing for heterogeneous systems.[18][19]

OpenTelemetry-based GenAI semantic convention libraries are emerging to unify logging, metrics, and tracing in multi-agent ecosystems. These solutions enable correlation of actions across multiple microservices, APIs, and external tool invocations through distributed tracing techniques.[14]

Practical implementations require standardized telemetry across diverse software components. Melange, for example, provides an instrumentation framework that combines source-code modification with passive metadata capture. The central challenge is maintaining context propagation across process boundaries and different technology stacks.[18]
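Across process and network boundaries, OpenTelemetry propagates context by default through the W3C Trace Context `traceparent` header. Its format is `version-traceid-parentid-flags`, with 2, 32, 16, and 2 lowercase hex characters respectively. The stdlib-only sketch below shows what "inject" and "extract" amount to when an agent calls a tool service over HTTP. The function names are hypothetical, plain dictionaries stand in for case-insensitive HTTP headers, and real deployments would use the SDK's propagators instead of hand-written parsing.

```python
import re
import secrets
from typing import NamedTuple, Optional

# W3C Trace Context: version (2 hex) - trace-id (32) - parent-id (16) - flags (2)
TRACEPARENT_RE = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


class SpanContext(NamedTuple):
    trace_id: str
    span_id: str
    sampled: bool


def inject(ctx: SpanContext, headers: dict[str, str]) -> None:
    """Write the caller's context into outgoing request headers."""
    flags = "01" if ctx.sampled else "00"
    headers["traceparent"] = f"00-{ctx.trace_id}-{ctx.span_id}-{flags}"


def extract(headers: dict[str, str]) -> Optional[SpanContext]:
    """Read the caller's context; return None if the header is missing or invalid."""
    m = TRACEPARENT_RE.match(headers.get("traceparent", ""))
    if m is None:
        return None
    version, trace_id, span_id, flags = m.groups()
    # All-zero IDs are invalid per the spec, and version "ff" is forbidden.
    if version == "ff" or trace_id == "0" * 32 or span_id == "0" * 16:
        return None
    return SpanContext(trace_id, span_id, sampled=bool(int(flags, 16) & 0x01))


def child_of(parent: SpanContext) -> SpanContext:
    """The callee keeps the trace ID and mints a new span ID for its own work."""
    return SpanContext(parent.trace_id, secrets.token_hex(8), parent.sampled)


# Agent process: start a trace and call a tool service.
agent_ctx = SpanContext(secrets.token_hex(16), secrets.token_hex(8), sampled=True)
outgoing: dict[str, str] = {}
inject(agent_ctx, outgoing)

# Tool service process: continue the same trace.
incoming = extract(outgoing)
assert incoming is not None
tool_ctx = child_of(incoming)
print(outgoing["traceparent"])
print(tool_ctx.trace_id == agent_ctx.trace_id)  # True
```

Because every hop keeps the same trace ID, a backend can stitch the agent's span and the tool service's span into a single end-to-end trace, even when the two run on different stacks.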

Integration with Orchestration Frameworks

Major AI agent frameworks are integrating compliance and observability layers into their core architectures. LangChain offers observability features that integrate with monitoring platforms, AutoGen implements conversational logging with context retention, and DSPy focuses on reproducible, high-performance pipelines with automatic prompt optimization and model-agnostic operation.[10]

Agent frameworks also differ significantly in their approach to compliance: LangGraph emphasizes stateful workflows with error recovery and time-travel debugging, CrewAI provides role-based task delegation with comprehensive memory systems, and AutoGen focuses on conversational teams with audit capabilities.[10]

Integration challenges include balancing workflow efficiency with compliance overhead, ensuring consistent logging across different framework components, and maintaining auditability without compromising system performance.
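One way to keep logging consistent, whichever framework invokes a tool, is to wrap the tool function itself. That way every call emits the same audit record, whether LangGraph, CrewAI, AutoGen, or plain code makes the call. The decorator below is a framework-agnostic sketch. The `audited` name, the record fields, and the in-memory `AUDIT_SINK` are hypothetical; a production version would send records to durable storage.

```python
import functools
import time
from typing import Any, Callable, TypeVar

F = TypeVar("F", bound=Callable[..., Any])
AUDIT_SINK: list[dict[str, Any]] = []  # stand-in for a durable log store


def audited(agent_id: str) -> Callable[[F], F]:
    """Decorator factory: record every call, its result or error, and latency."""
    def decorate(fn: F) -> F:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            record: dict[str, Any] = {
                "agent_id": agent_id,
                "tool": fn.__name__,
                "args": repr(args),
                "kwargs": repr(kwargs),
            }
            start = time.perf_counter()
            try:
                result = fn(*args, **kwargs)
                record.update(status="ok", result=repr(result))
                return result
            except Exception as exc:
                record.update(status="error", error=f"{type(exc).__name__}: {exc}")
                raise  # auditing must never swallow the failure
            finally:
                record["latency_ms"] = round((time.perf_counter() - start) * 1000, 3)
                AUDIT_SINK.append(record)
        return wrapper  # type: ignore[return-value]
    return decorate


@audited(agent_id="research-agent")
def search_docs(query: str, limit: int = 3) -> list[str]:
    return [f"doc-{i}:{query}" for i in range(limit)]


@audited(agent_id="research-agent")
def divide(a: float, b: float) -> float:
    return a / b


search_docs("retention policy", limit=2)
try:
    divide(1, 0)
except ZeroDivisionError:
    pass

for rec in AUDIT_SINK:
    print(rec["tool"], rec["status"], rec.get("error", "-"))
```

Re-raising inside the wrapper is deliberate: the audit layer observes failures but leaves error handling to the framework's own recovery logic.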

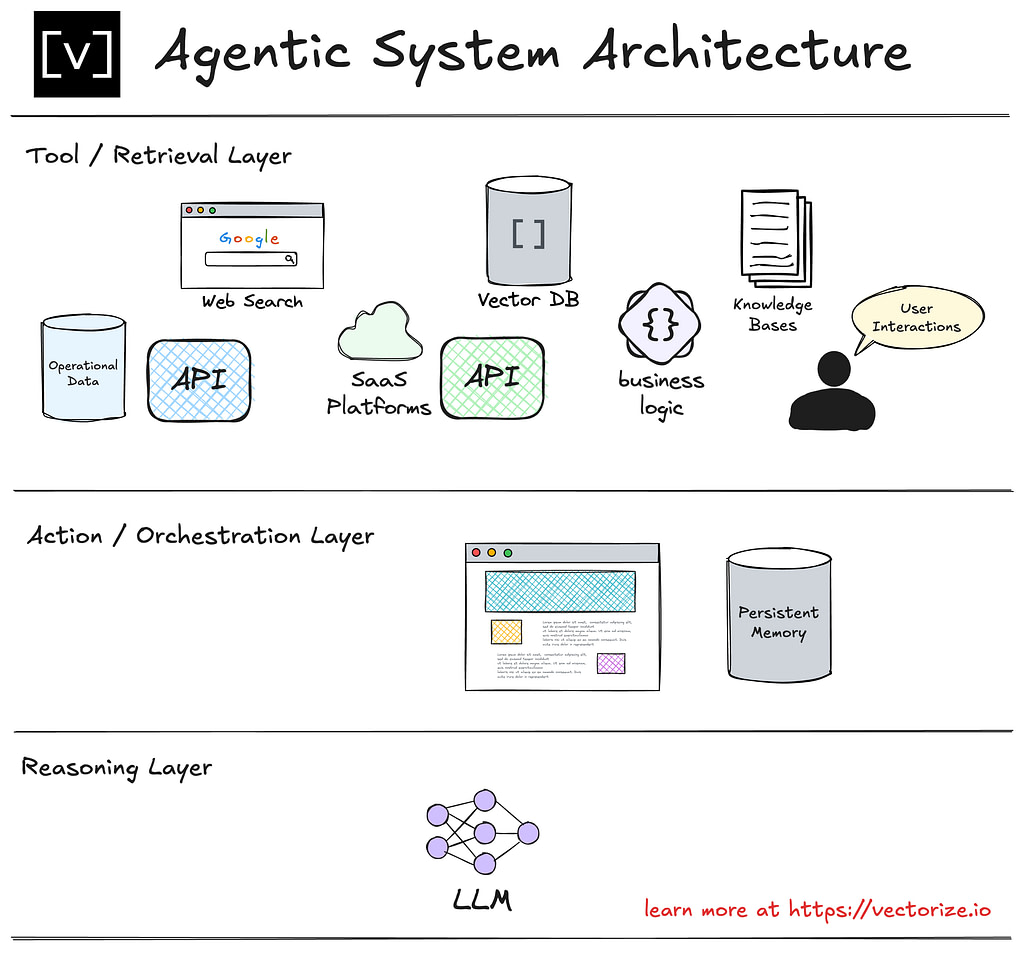

Diagram of a layered agentic system architecture including tool/retrieval, action/orchestration, and reasoning layers featuring vector databases, APIs, LLMs, and user interactions.

Interoperability and Scaling Challenges

Scaling auditability across enterprise environments requires addressing several critical challenges. Forward-thinking organizations implement "embedded compliance" by integrating regulatory requirements directly into the design and operation of their AI systems. This includes real-time monitoring systems that detect potential violations before they occur and automated compliance checks that prevent risky actions.[20]
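Here is a minimal sketch of such a pre-execution check, assuming each proposed action arrives as a structured request. Deterministic rules allow the action, block it, or escalate it for human approval, and every verdict is recorded. The rule set, the 10,000 threshold, the placeholder country code, and the action names are hypothetical examples, not requirements drawn from any regulation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Callable, Optional


class Verdict(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # requires human approval before execution
    BLOCK = "block"


@dataclass
class Action:
    agent_id: str
    name: str
    params: dict[str, Any] = field(default_factory=dict)


# A rule returns (verdict, reason) when it applies, or None when it does not.
Rule = Callable[[Action], Optional[tuple[Verdict, str]]]


def block_restricted_destination(a: Action) -> Optional[tuple[Verdict, str]]:
    if a.name == "wire_transfer" and a.params.get("country") in {"XX"}:  # placeholder code
        return Verdict.BLOCK, "destination country is on the blocked list"
    return None


def escalate_large_transfer(a: Action) -> Optional[tuple[Verdict, str]]:
    if a.name == "wire_transfer" and a.params.get("amount", 0) > 10_000:
        return Verdict.ESCALATE, "amount above autonomous limit"
    return None


RULES: list[Rule] = [block_restricted_destination, escalate_large_transfer]
DECISIONS: list[dict[str, Any]] = []  # audit trail of every gate decision
SEVERITY = {Verdict.ALLOW: 0, Verdict.ESCALATE: 1, Verdict.BLOCK: 2}


def gate(action: Action) -> Verdict:
    """Evaluate all rules before execution; the most restrictive verdict wins."""
    verdict, reasons = Verdict.ALLOW, []
    for rule in RULES:
        hit = rule(action)
        if hit is not None:
            v, reason = hit
            reasons.append(reason)
            if SEVERITY[v] > SEVERITY[verdict]:
                verdict = v
    DECISIONS.append({"agent": action.agent_id, "action": action.name,
                      "params": action.params, "verdict": verdict.value,
                      "reasons": reasons})
    return verdict


print(gate(Action("payments-agent", "wire_transfer", {"amount": 500, "country": "DE"})))     # Verdict.ALLOW
print(gate(Action("payments-agent", "wire_transfer", {"amount": 50_000, "country": "DE"})))  # Verdict.ESCALATE
print(gate(Action("payments-agent", "wire_transfer", {"amount": 50_000, "country": "XX"})))  # Verdict.BLOCK
```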

Key scaling considerations include stateless tools and microservices that can be containerized and load-balanced, APIs built for high concurrency with retry/backoff logic, and execution engines that support distributed task queues and sharding. Observability tools must track every step an agent takes: tool use, API response times, error rates, and decision points.[21]
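Retry/backoff logic is a standard pattern. For auditability, the useful addition is that every attempt leaves a record, not just the final outcome. The sketch below uses capped exponential backoff with "full jitter", meaning a random delay between zero and the exponential cap. The parameter values, the `TransientError` type, and the injectable `sleep` and `rng` arguments (which make the sketch testable) are illustrative assumptions.

```python
import random
import time
from typing import Any, Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 429s, 503s)."""


def _note(log: Optional[list[dict[str, Any]]], **event: Any) -> None:
    if log is not None:
        log.append(event)


def call_with_backoff(
    fn: Callable[[], T],
    attempts: int = 5,
    base: float = 0.2,
    cap: float = 5.0,
    log: Optional[list[dict[str, Any]]] = None,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call fn, retrying TransientError with capped exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    if rng is None:
        rng = random.Random()
    for attempt in range(1, attempts + 1):
        try:
            result = fn()
        except TransientError as exc:
            if attempt == attempts:
                _note(log, attempt=attempt, status="gave_up", error=str(exc))
                raise
            # Full jitter: uniform delay in [0, min(cap, base * 2**(attempt - 1))].
            delay = rng.uniform(0, min(cap, base * 2 ** (attempt - 1)))
            _note(log, attempt=attempt, status="retry", error=str(exc), delay_s=round(delay, 3))
            sleep(delay)
        else:
            _note(log, attempt=attempt, status="ok")
            return result
    raise AssertionError("not reached: the loop either returns or raises")


# Usage: a flaky tool that fails twice, then succeeds.
calls = {"n": 0}


def flaky_tool() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("HTTP 503")
    return "ok"


events: list[dict[str, Any]] = []
print(call_with_backoff(flaky_tool, log=events, sleep=lambda s: None, rng=random.Random(0)))  # ok
print([e["status"] for e in events])  # ['retry', 'retry', 'ok']
```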

The interconnected nature of modern business amplifies compliance risks, as AI agent decisions cascade through supply chains, partner networks, and customer relationships in unpredictable ways. Organizations require risk management frameworks spanning functions and processes to account for systemic AI-driven decisions.[20]

Emerging & Experimental Approaches

Academic Research on Agentic Provenance

Recent academic research focuses on several frontier areas, including agent hijacking evaluations, principal-agent economic theory applications, and multi-agent system governance. The U.S. AI Safety Institute (now Center for AI Standards and Innovation) has published comprehensive frameworks addressing agent hijacking attacks, which involve "actors using deceptive, malicious, or harmful prompts to promote damaging actions and shape agentic behaviour".[22]

Key research themes include accuracy problems, where cascading hallucinations lead to actions "unaligned with human goals"; safety risks from unpredictable, proactive, and non-deterministic agent behavior; and the development of semi-autonomous systems with human oversight rather than fully autonomous agents.[22]

Academic institutions are developing sophisticated threat models for agentic systems, with research indicating that "increased agentic autonomy means increased risk" across performance, safety, privacy, security, and misuse dimensions.[22]

Secure Multi-Party Auditing and Federated Auditability

Secure Multi-Party Computation (MPC) enables collaborative auditing without exposing sensitive organizational data. MPC allows multiple parties to compute functions over their private data without revealing the data itself, facilitating secure collaborative analysis and compliance verification.[23][24][25][26]
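The core idea can be shown with additive secret sharing, one of the basic building blocks of MPC protocols (CrypTen, for instance, uses arithmetic secret sharing, but the code below does not use its API). In the sketch, three organizations jointly compute their total number of flagged agent actions. Each one splits its private count into random shares modulo a large prime, and only the sum of all shares is ever reconstructed. The sketch assumes honest-but-curious parties and secure channels, and the organization names and counts are made up.

```python
import secrets

PRIME = 2**61 - 1  # field modulus; must exceed any possible total


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n random shares that sum to value mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    return sum(shares) % PRIME


# Each organization's private count of flagged agent actions (illustrative).
private_counts = {"bank_a": 17, "bank_b": 4, "bank_c": 29}
parties = list(private_counts)
n = len(parties)

# Step 1: every party splits its count and sends share j to party j.
# Any n-1 shares of a value are uniformly random and reveal nothing about it.
inbox: dict[str, list[int]] = {p: [] for p in parties}
for owner, count in private_counts.items():
    for receiver, s in zip(parties, share(count, n)):
        inbox[receiver].append(s)

# Step 2: each party adds up the shares it received and publishes only that sum.
partial_sums = {p: sum(received) % PRIME for p, received in inbox.items()}

# Step 3: combining the published partial sums yields the total and nothing more.
total = reconstruct(list(partial_sums.values()))
print(total)  # 50
```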

Practical implementations include CrypTen, Meta's framework that "exposes popular secure MPC primitives via abstractions common in modern machine-learning frameworks". It supports efficient private evaluation of ML models under a semi-honest threat model, and its authors report that two parties can perform some private inference tasks faster than real time.[26]

Current applications include analyzing patient data across hospitals for healthcare research, collaborative analytics and forecasting in retail, and multi-party risk assessment in financial services. However, MPC faces significant challenges, including computational overhead, implementation complexity, and limited scalability.[24]

Differential Privacy in Audit Logs

Federated learning approaches incorporate differential privacy to protect individual data while still enabling collective insights. Personalized Local Differential Privacy (PLDP) frameworks let each client specify its own privacy requirements instead of applying uniform protection to all participants.[27][28][29]

Key technical approaches include Central Differential Privacy (CDP), in which the server adds noise to aggregated results; Local Differential Privacy (LDP), in which each party adds noise before sharing its data; and hybrid approaches that balance privacy protection against model utility.[28][29]
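The difference between the central and local models can be sketched with two textbook mechanisms, applied here to a hypothetical audit question: how many agent sessions accessed restricted data? In the central model, a trusted aggregator sees the true count and adds Laplace noise with scale 1/ε (a count has sensitivity 1). In the local model, each party perturbs its own yes/no bit with randomized response before sharing it, and the aggregator corrects for the known bias in the noisy total. The ε value, population size, and seed are illustrative assumptions.

```python
import math
import random


def laplace(rng: random.Random, scale: float) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def central_dp_count(true_bits: list[int], epsilon: float, rng: random.Random) -> float:
    """CDP: a trusted server counts exactly, then adds Laplace(1/epsilon) noise."""
    sensitivity = 1.0  # one session changes the count by at most 1
    return sum(true_bits) + laplace(rng, sensitivity / epsilon)


def randomized_response(bit: int, epsilon: float, rng: random.Random) -> int:
    """LDP: each party reports its true bit with probability e^eps / (1 + e^eps)."""
    p_truth = math.exp(epsilon) / (1 + math.exp(epsilon))
    return bit if rng.random() < p_truth else 1 - bit


def local_dp_count(true_bits: list[int], epsilon: float, rng: random.Random) -> float:
    """Aggregate randomized responses and remove the known bias."""
    p = math.exp(epsilon) / (1 + math.exp(epsilon))
    reports = [randomized_response(b, epsilon, rng) for b in true_bits]
    observed = sum(reports) / len(reports)
    # E[observed] = pi*p + (1 - pi)*(1 - p); solve for the true rate pi.
    estimated_rate = (observed - (1 - p)) / (2 * p - 1)
    return estimated_rate * len(true_bits)


rng = random.Random(7)
# 10,000 hypothetical agent sessions, 1,200 of which touched restricted data.
bits = [1] * 1200 + [0] * 8800
eps = 1.0
print("true count:", sum(bits))
print("central DP:", round(central_dp_count(bits, eps, rng), 1))
print("local DP:  ", round(local_dp_count(bits, eps, rng), 1))
```

At the same ε, the local estimate is typically much noisier than the central one, because every party adds its own noise instead of the aggregator adding it once. This is the utility gap that hybrid approaches aim to narrow.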

The Local Central Differential Privacy (LCDP) model bridges the CDP and LDP approaches: it lets different aspects of a dataset participate while still guaranteeing data security, without discarding information. These techniques are particularly relevant for multi-organization compliance scenarios in which audit data must be shared while proprietary information stays protected.[29]

Practical Case Studies

Enterprise Deployments in Financial Services

Financial services organizations lead in agentic AI deployment with sophisticated compliance frameworks. BNY currently treats AI agents much like employees, giving them logins, email addresses, and managers who supervise them. The agents can autonomously detect and fix code issues, subject to human approval.[30][31]

Citi has announced deployment of agentic systems for the "do it for me" economy, with internal applications including automated software patches and upgrades, real-time risk profiling for loans, cashflow forecasting, customer onboarding, and fraud detection. Because these applications fall under regulatory and industry requirements such as Basel III, PCI DSS, and KYC/AML rules, they need sophisticated auditability mechanisms.[30]

Key compliance challenges include: cross-border regulatory variations, explainable AI requirements for lending decisions, and real-time fraud pattern detection with complete audit trails. Solutions incorporate enhanced KYC and customer due diligence procedures using AI agents that screen customers against sanctions lists, adverse media, and politically exposed person (PEP) data.[32]
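Here is a simplified sketch of the screening step, assuming an in-memory watchlist. Names are normalized and compared with `difflib.SequenceMatcher` from the Python standard library, and every decision, including clears, is written to an audit record along with the matched entry, score, and threshold. Real screening relies on curated sanctions and PEP data and specialized matching (transliteration, aliases, dates of birth). The watchlist entries and the 0.85 threshold here are hypothetical.

```python
import difflib
import unicodedata
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical watchlist entries (name, list type); not real sanctions data.
WATCHLIST = [
    ("Ivan Petrovsky", "sanctions"),
    ("Maria Gonzales Ruiz", "pep"),
    ("Acme Shell Holdings Ltd", "adverse_media"),
]
THRESHOLD = 0.85


def normalize(name: str) -> str:
    # Strip accents, lowercase, replace punctuation with spaces, collapse whitespace.
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode()
    cleaned = "".join(c if c.isalnum() or c.isspace() else " " for c in ascii_name.lower())
    return " ".join(cleaned.split())


@dataclass(frozen=True)
class ScreeningRecord:
    customer: str
    decision: str  # "clear" or "review"
    best_match: Optional[str]
    list_type: Optional[str]
    score: float
    threshold: float
    screened_at: str


def screen(customer: str) -> ScreeningRecord:
    target = normalize(customer)
    best, best_type, best_score = None, None, 0.0
    for entry, list_type in WATCHLIST:
        score = difflib.SequenceMatcher(None, target, normalize(entry)).ratio()
        if score > best_score:
            best, best_type, best_score = entry, list_type, score
    hit = best_score >= THRESHOLD
    return ScreeningRecord(
        customer=customer,
        decision="review" if hit else "clear",  # a human analyst resolves hits
        best_match=best if hit else None,
        list_type=best_type if hit else None,
        score=round(best_score, 3),
        threshold=THRESHOLD,
        screened_at=datetime.now(timezone.utc).isoformat(),
    )


for name in ["María González Ruiz", "John Smith"]:
    print(screen(name))
```

Recording clears as well as hits, together with the threshold in force at the time, is what lets an auditor later reconstruct why a customer was or was not escalated.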

Healthcare and Regulated Industries

Healthcare AI agent deployments face critical traceability requirements due to HIPAA, FDA regulations, and patient safety considerations. Implementations include clinical decision support systems requiring complete medical decision audit trails, patient data privacy systems with critical data access logging, and drug discovery collaboration with research integrity tracking.[33]

Healthcare AI agents that share data across organizations may need privacy-preserving techniques such as differential privacy, while still maintaining complete provenance tracking. Under the GDPR, organizations using AI in AML and KYC processes must also perform Legitimate Interest Assessments (LIA) and compatibility tests when AI models are trained on pre-existing data.[34]

Key technical requirements include: anonymization and pseudonymization during AI testing phases, adequate retention periods for AI system data storage, and comprehensive Data Protection Impact Assessments (DPIA) for AI implementations.[34]
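Pseudonymization and retention can both be enforced in the logging path itself. The sketch below replaces patient identifiers with a keyed HMAC-SHA256 pseudonym. The pseudonym is stable, so records can still be linked for audit, but it cannot be reversed without the key. The sketch also purges records older than a configured retention period. The key handling, field names, and 365-day period are illustrative assumptions. Whether keyed hashing counts as adequate pseudonymization, and which retention period is adequate, are questions for the DPIA.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone
from typing import Any

# Assumption: in practice the key lives in a KMS or HSM, stored separately from the logs.
PSEUDONYM_KEY = b"example-key-rotate-me"
RETENTION = timedelta(days=365)  # hypothetical retention period


def pseudonymize(identifier: str) -> str:
    """Keyed hash: the same input gives the same pseudonym; reversing it requires the key."""
    digest = hmac.new(PSEUDONYM_KEY, identifier.encode("utf-8"), hashlib.sha256)
    return "p_" + digest.hexdigest()[:16]


def log_access(store: list[dict[str, Any]], agent_id: str, patient_id: str,
               purpose: str, when: datetime) -> None:
    """Record a data access without storing the raw identifier."""
    store.append({
        "ts": when,
        "agent_id": agent_id,
        "subject": pseudonymize(patient_id),
        "purpose": purpose,
    })


def enforce_retention(store: list[dict[str, Any]], now: datetime) -> int:
    """Delete records older than RETENTION and return how many were purged."""
    keep = [r for r in store if now - r["ts"] <= RETENTION]
    purged = len(store) - len(keep)
    store[:] = keep
    return purged


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
access_log: list[dict[str, Any]] = []
log_access(access_log, "triage-agent", "MRN-0042", "clinical_summary", now - timedelta(days=400))
log_access(access_log, "triage-agent", "MRN-0042", "clinical_summary", now - timedelta(days=10))
log_access(access_log, "billing-agent", "MRN-0077", "claim_check", now - timedelta(days=2))

print(enforce_retention(access_log, now))          # 1
print(len({r["subject"] for r in access_log}))     # 2
print(any("MRN" in str(r) for r in access_log))    # False
```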

Lessons from Regulatory Compliance

Organizations in regulated industries have developed several best practices for AI agent compliance. Critical implementation steps include: aligning compliance programs with business strategy and operations, identifying actions at all workflow layers for comprehensive accountability, conducting regular AI agent audits to verify regulatory compliance, and training employees on responsible AI practices.[35][36]

Successful implementations avoid "becoming too reliant on agent-based AI systems too early" and commit adequate ongoing resources so that compliance evolves alongside the AI systems. The EU AI Act and similar regulations require organizations to demonstrate explainability and model transparency without compromising customer data.[32][35]

Regulatory frameworks increasingly emphasize "compliance-by-design" approaches where regulatory requirements are embedded directly into AI system architecture rather than added as post-implementation controls.[20][36]

Gaps and Future Research Opportunities

Current Technology Limitations

Despite significant advances, several critical gaps persist in current AI agent traceability and auditability solutions. Cross-system correlation remains technically challenging, particularly for heterogeneous enterprise environments with diverse technology stacks. Most current solutions provide visibility within specific platforms or frameworks but struggle with end-to-end tracing across organizational boundaries.[18]

Standardization gaps create interoperability challenges between different observability platforms, compliance frameworks, and audit systems. While OpenTelemetry provides a foundation for distributed tracing, AI-specific semantic conventions are still evolving, and many implementations remain vendor-specific.[14][12]

Real-time compliance validation represents another significant challenge. Many compliance frameworks rely on periodic audits and post-hoc analysis rather than continuous monitoring and real-time intervention capabilities. This gap becomes critical as AI agents operate at increasing speeds and scales.[35][20]

Regulatory Framework Evolution

Regulatory frameworks are evolving rapidly but remain fragmented across jurisdictions and industries. The EU AI Act establishes comprehensive requirements for AI system transparency and explainability, while emerging regulations in the United States focus on sector-specific applications.[32][35][36]

Key regulatory gaps include: lack of standardized audit requirements across jurisdictions, insufficient guidance on acceptable levels of AI autonomy for regulated industries, and unclear liability frameworks for AI agent actions.[37][22][20]

Future regulatory development will likely emphasize "compliance-by-design" approaches requiring organizations to demonstrate embedded compliance capabilities rather than retrospective audit compliance.[20][36]

Research Directions and Innovation Opportunities

Several promising research directions emerge from current limitations:

Advanced Privacy-Preserving Techniques: Development of practical federated auditability systems using secure multi-party computation and differential privacy for cross-organizational compliance scenarios.[23][28][26]

Automated Compliance Verification: Research into AI systems capable of automatically verifying their own compliance status and generating human-readable explanations for regulatory review.[38][36]

Emergent Behavior Detection: Development of systems capable of identifying and flagging unexpected AI agent behaviors that may indicate compliance risks or system drift.[7][22]

Cross-System Semantic Standards: Evolution of standardized semantic conventions for AI agent actions, decisions, and audit events across diverse technology platforms.[14][12]

Real-Time Intervention Frameworks: Research into systems capable of preventing non-compliant AI agent actions before they occur, rather than detecting violations after the fact.[35][20]

The convergence of these research directions with practical enterprise needs will likely drive the next generation of AI agent compliance solutions, emphasizing proactive rather than reactive approaches to traceability and auditability.

Summary

The current state of AI agent traceability and auditability reflects a rapidly evolving landscape with significant technical capabilities emerging alongside persistent challenges. Enterprise-ready solutions exist from major cloud providers and specialized vendors, with over 60% of analyzed frameworks offering high auditability capabilities and real-time monitoring. However, implementation remains fragmented, with critical gaps in cross-system correlation and standardized compliance frameworks.

Financial services and healthcare sectors demonstrate the most advanced implementations, driven by stringent regulatory requirements and high-stakes operational environments. These industries have developed sophisticated approaches combining traditional compliance frameworks with cutting-edge observability platforms, creating comprehensive audit trails for AI agent actions.[30][33]

The technical foundation for comprehensive AI agent compliance exists but requires continued integration and standardization efforts. Emerging experimental approaches, particularly in secure multi-party auditing and differential privacy, point toward future capabilities that will enable more sophisticated cross-organizational compliance scenarios while preserving data privacy and competitive confidentiality.

Organizations implementing agentic AI systems should prioritize embedded compliance approaches, building regulatory requirements directly into system architecture rather than treating compliance as a post-implementation consideration. The rapid evolution of both technology capabilities and regulatory frameworks demands adaptive governance structures capable of evolving with advancing AI capabilities.

⁂

- https://developer.nvidia.com/blog/enhancing-ai-transparency-and-ethical-considerations-with-model-card/

- https://jozu.com/blog/unifying-documentation-and-provenance-for-ai-and-ml-a-developers-guide-to-navigating-the-chaos/

- https://iapp.org/news/a/5-things-to-know-about-ai-model-cards

- https://www.rapidinnovation.io/post/ai-agent-compliance-intelligence-advisor

- https://sites.research.google/datacardsplaybook/

- https://realm.security/security-monitoring-for-ai-agents-and-mcp/

- https://www.lumenova.ai/blog/ai-agents-revolution-building-trustworthy-ai/

- https://www.merge.dev/blog/ai-agent-observability

- https://www.applause.com/blog/how-agentic-ai-changes-software-development-and-qa/

- https://theflyingbirds.in/blog/comparative-analysis-of-ai-agent-frameworks-with-dspy-langgraph-autogen-and-crewai

- https://delysium.gitbook.io/whitepaper/technical-overview/blockchain-layer-integration-of-ai-x-blockchain/key-components-of-this-integration/decentralized-chronicle-the-immutable-ledger-of-ai-agents

- https://coralogix.com/ai-blog/opentelemetry-for-ai-tracing-prompts-tools-and-inferences/

- https://uptrace.dev/blog/opentelemetry-ai-systems

- https://www.dynatrace.com/news/blog/ai-agent-observability-amazon-bedrock-agents-monitoring/

- https://learn.microsoft.com/en-us/azure/ai-foundry/how-to/develop/trace-agents-sdk

- https://www.fiddler.ai/blog/agentic-observability-development

- https://aws.amazon.com/blogs/machine-learning/observing-and-evaluating-ai-agentic-workflows-with-strands-agents-sdk-and-arize-ax/

- https://www.dpss.inesc-id.pt/~lveiga/papers/TR-IID-90869-goncalo-garcia_resumo.pdf

- https://arxiv.org/html/2504.03683v1

- https://www.nacdonline.org/all-governance/governance-resources/directorship-magazine/online-exclusives/2025/q3-2025/autonomous-artificial-intelligence-oversight/

- https://www.aziro.com/blog/agentic-ai-action-layer-tools-apis-execution-engines-for-true-autonomy/

- https://oliverpatel.substack.com/p/top-12-papers-on-agentic-ai-governance-926

- https://dialzara.com/blog/secure-multi-party-computation-protecting-data-privacy-in-ai

- https://www.ai21.com/glossary/multi-party-computation/

- https://www.enkryptai.com/glossary/secure-multi-party-computation-for-ai

- https://ai.meta.com/research/publications/crypten-secure-multi-party-computation-meets-machine-learning/

- https://partnershiponai.org/paper_page/differentially-private-federated-statistics/

- https://pmc.ncbi.nlm.nih.gov/articles/PMC10048376/

- https://jowua.com/wp-content/uploads/2024/10/2024.I3.018.pdf

- https://akka.io/blog/adopting-agentic-ai-systems-for-financial-services-applications

- https://sam-solutions.com/blog/ai-agents-in-finance/

- https://complyadvantage.com/insights/a-guide-to-the-transformative-role-of-agentic-ai-in-aml/

- https://www.tekrevol.com/blogs/ai-agents-in-healthcare-finance-and-retail-use-cases-by-industry/

- https://schoenherr.eu/content/ai-in-aml-and-kyc-checks-navigating-the-data-protection-challenges

- https://www.techtarget.com/searchenterpriseai/feature/Agentic-AI-compliance-and-regulation-What-to-know

- https://www.linkedin.com/pulse/compliance-agentic-ai-building-trust-part-3-thomas-fox-8crjc

- https://www.smarsh.com/blog/thought-leadership/agent-ai-unleashed-who-takes-blame-when-mistakes-are-made

- https://www.linkedin.com/pulse/anthropics-ceo-says-ai-interpretability-urgent-heres-what-mehta-xjq5c

- https://sixtysixten.com/?p=4632

- https://www.ibm.com/think/insights/ai-agent-observability

- https://www.linkedin.com/pulse/trust-verify-how-compliance-can-harness-ai-agents-safely-thomas-fox-y9w1c

- https://ioni.ai/post/best-ai-tools-for-compliance

- https://www.centraleyes.com/top-ai-compliance-tools/

- https://www.algonew.com/en/governed-ai-automation-without-losing-traceability/

- https://www.exabeam.com/explainers/ai-cyber-security/agentic-ai-how-it-works-and-7-real-world-use-cases/

- https://dev.to/siddhantkcode/the-mechanics-of-distributed-tracing-in-opentelemetry-1ohk

- https://www.lasso.security/blog/agentic-ai-tools

- https://scet.berkeley.edu/the-next-next-big-thing-agentic-ais-opportunities-and-risks/

- https://blog.langchain.com/how-to-think-about-agent-frameworks/

- https://research.aimultiple.com/agentic-ai-trends/

- https://www.linkedin.com/posts/mitenmehta_choosing-the-right-framework-langchain-autogen-activity-7358090360314605568-se24

- https://arxiv.org/pdf/2403.18998.pdf

- https://langfuse.com/blog/2025-03-19-ai-agent-comparison

- https://www.mckinsey.com/capabilities/quantumblack/our-insights/seizing-the-agentic-ai-advantage

- https://relevanceai.com/agent-templates-tasks/gdpr-compliance-monitoring-ai-agents

- https://en.wikipedia.org/wiki/Explainable_artificial_intelligence

- https://arya.ai/blog/ai-for-regulatory-compliance

- https://www.youtube.com/watch?v=fGKNUvivvnc

- https://www.talkdesk.com/news-and-press/press-releases/ai-agents-for-financial-services/

- https://captaincompliance.com/education/kya-know-your-ai-agent-compliance-privacy-and-governance-in-the-agentic-era/

- https://flower.ai/docs/framework/explanation-differential-privacy.html

- https://www.linkedin.com/pulse/secure-multi-party-computation-future-data-sharing-erin-f-nicholson-owdye

- https://www.regology.com/blog/agentic-ai-what-is-it-and-what-does-it-do-for-compliance

- https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/3b23254231391d8955eeb7866f765abf/ad25bddb-e624-4ece-86d2-c95bfa20a919/c000ee74.csv

- https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/3b23254231391d8955eeb7866f765abf/ad25bddb-e624-4ece-86d2-c95bfa20a919/a4bf1dc8.csv