Agents of change

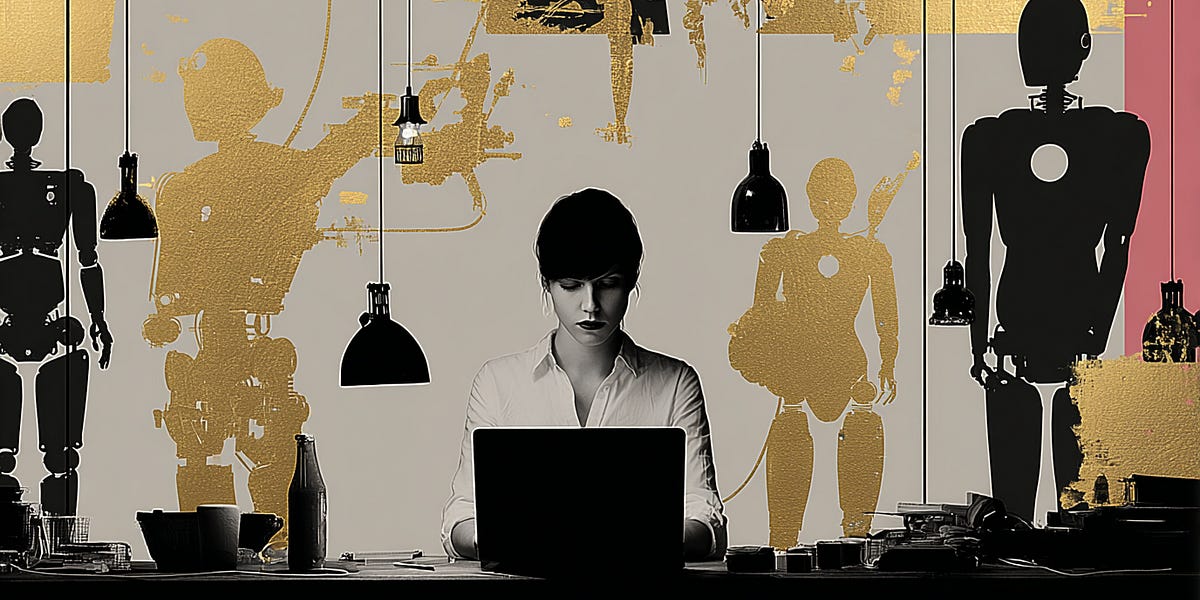

AI agents can do real work or generate chaos. The difference isn't capability—it's human judgment.

Ethan Mollick at One Useful Thing reports on OpenAI's new benchmark, which pitted AI against industry experts averaging 14 years of experience on tasks that take four to seven hours to complete. Humans won, but narrowly, and mainly because AI struggled with formatting instructions, not accuracy or analysis. Mollick then tested Claude Sonnet 4.5 on academic paper replication, a task that typically consumes hours of careful researcher time. Without detailed guidance, Claude read the paper, sorted through data files, converted code from STATA to Python, and reproduced the findings. He verified the results, then had a second model repeat the replication. Both checked out.

Here's where it gets interesting for product and legal teams: Mollick also asked Claude to turn one corporate memo into PowerPoint presentations. He got 17 versions. The test shows that what matters now isn't whether agents can handle substantive work. They can. The question is what we should delegate versus what we merely can delegate. OpenAI's research suggests a workflow: delegate the task to AI as a first pass, review the output, ask for corrections if it falls short, and do the work yourself if that still fails. That workflow could make expert work 40% faster and 60% cheaper while keeping humans in control. Without that discipline, we're headed for organizational chaos where AI generates endless variations nobody needs.
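For teams that want to make that workflow concrete, here is a minimal Python sketch of the delegate, review, correct, or do-it-yourself loop. It is an illustration under stated assumptions, not OpenAI's or Mollick's implementation: `delegate_with_review`, `agent`, `review`, `do_it_yourself`, and `max_corrections` are all hypothetical names, and the single-correction default is a guess at what "try corrections if needed" might mean in practice.

```python
from typing import Callable, Optional, Tuple


def delegate_with_review(
    task: str,
    agent: Callable[[str, Optional[str]], str],
    review: Callable[[str], Optional[str]],
    do_it_yourself: Callable[[str], str],
    max_corrections: int = 1,
) -> Tuple[str, str]:
    """Return (result, finished_by), where finished_by is "agent" or "human".

    All parameters are hypothetical stand-ins:
      agent(task, feedback)  -> a draft; feedback is None on the first pass
      review(draft)          -> None if a human accepts it, else feedback text
      do_it_yourself(task)   -> the human-produced result
    """
    feedback: Optional[str] = None
    # One first pass plus up to max_corrections correction attempts.
    for _ in range(max_corrections + 1):
        draft = agent(task, feedback)
        feedback = review(draft)  # the human stays in the loop on every draft
        if feedback is None:
            return draft, "agent"
    # Corrections did not get there, so the human takes the task back.
    return do_it_yourself(task), "human"
```

The design choice worth noticing is the cap on correction attempts: it bounds the number of drafts the agent can produce before a person takes over, which is the discipline that keeps one memo from turning into 17 slide decks.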

For legal and product teams building with these systems: what gets delegated determines whether agents solve real problems or create new ones.