Advancing U.S. Competitiveness in Agentic Gen AI: A Strategic Framework for Interoperability and Governance

The paper summarized here proposes a five-layer architectural framework that embeds governance and security requirements throughout system design rather than treating them as separate concerns.

Citation: Joshi, Satyadhar. "Advancing U.S. Competitiveness in Agentic Gen AI: A Strategic Framework for Interoperability and Governance." International Journal of Innovative Science and Research Technology (IJISRT), vol. 10, no. 9, September 2025. https://doi.org/10.38124/ijisrt/25sep978

Most writing on agentic AI either focuses narrowly on technical implementation or treats governance as an afterthought. This paper does something different: it connects the technical architecture decisions companies are making today with the governance and interoperability challenges that will determine whether these systems actually work at scale. If you're building autonomous AI systems, advising teams that are, or trying to understand how regulatory fragmentation will affect deployment, this provides the missing middle layer: the operational reality between "here's what the technology can do" and "here's what the regulations require." The analysis of where current deployments are failing, and why, offers concrete insights that are hard to find elsewhere, particularly at the intersection of technical design and governance requirements.

Here's what's happening with AI right now: companies are building systems that don't just respond to prompts—they pursue goals, make decisions, and take actions on their own. These agentic AI systems represent a different kind of technology problem. Instead of assisting human operators, they're designed to operate independently within defined parameters.

The numbers tell a complicated story. About 61% of organizations are building these systems, which shows real momentum. But 40% of early deployments are failing. That failure rate isn't just a technical hiccup—it reflects deeper problems with how companies are approaching autonomous AI.

The core issue isn't that the technology doesn't work. It's that organizations don't have the structures in place to deploy it responsibly. Technical complexity is part of it, but the bigger obstacles are governance gaps, regulatory uncertainty, and new security risks that traditional cybersecurity doesn't address. Underneath all of this sits a more fundamental problem: there aren't agreed-upon standards for how these systems should work together. That's creating a fragmented global environment where U.S. technologies risk getting isolated from international markets.

What Makes Agentic AI Different

Traditional AI systems wait for instructions. Agentic AI systems set their own sub-goals and figure out how to achieve them. The distinction matters because it changes the nature of what you're deploying.

These systems have four defining characteristics. First, they operate without constant human supervision—autonomy means they execute tasks based on their understanding of objectives rather than step-by-step direction. Second, they adapt based on what they encounter, adjusting their behavior as environments change. Third, they pursue goals rather than simply responding to inputs. Fourth, they perceive their environment, make decisions, and execute actions in a continuous loop.
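The fourth characteristic, the perceive-decide-act loop, is easier to see in code. What follows is a minimal sketch rather than anything taken from the paper: the `LoopAgent` class, its toy counter environment, and the `max_steps` cap are hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LoopAgent:
    """Hypothetical agent that drives a counter toward a goal value."""
    goal: int
    max_steps: int = 20                      # hard cap so the loop always ends
    log: List[str] = field(default_factory=list)

    def perceive(self, env: Dict[str, int]) -> int:
        # Read the current state of the environment.
        return env["value"]

    def decide(self, observed: int) -> str:
        # Choose an action by comparing the observation with the goal.
        if observed < self.goal:
            return "increment"
        if observed > self.goal:
            return "decrement"
        return "stop"

    def act(self, env: Dict[str, int], action: str) -> None:
        # Apply the chosen action to the environment.
        if action == "increment":
            env["value"] += 1
        elif action == "decrement":
            env["value"] -= 1

    def run(self, env: Dict[str, int]) -> int:
        """Loop until the goal is reached; return the step at which it stopped."""
        for step in range(self.max_steps):
            observed = self.perceive(env)
            action = self.decide(observed)
            self.log.append(f"step={step} observed={observed} action={action}")
            if action == "stop":
                return step
            self.act(env, action)
        return self.max_steps


env = {"value": 3}
agent = LoopAgent(goal=6)
steps = agent.run(env)
```

Even in a toy like this, the step cap and the per-step log are the kind of control points the rest of the article argues for: the agent acts on its own, but within a bound and with a record.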

Companies are deploying these systems in finance, healthcare, manufacturing, and cybersecurity. The applications make sense on paper: automation potential is real, and efficiency gains can be substantial. But the gap between potential and reality shows up quickly when you try to move from pilot to production.

Why Deployment Keeps Failing

The 40% failure rate in early deployments stems from interconnected problems that traditional implementation approaches don't solve.

Technical complexity is the most obvious barrier. Building and integrating agentic systems requires infrastructure that most organizations don't have. You need specialized expertise that's in short supply, and the development process doesn't look like typical software engineering.

Governance gaps run deeper. Most organizations lack clear policies for what autonomous systems can and can't do. When an AI agent makes a decision that affects business operations or customer interactions, who's responsible? How do you audit decisions made at machine speed? What does oversight even mean when systems operate faster than humans can monitor them? These aren't rhetorical questions—they're operational problems that companies hit immediately.

Regulatory uncertainty compounds everything. The EU AI Act creates compliance requirements that vary by risk level and use case. In the U.S., sector-specific regulations apply differently depending on the industry and application. Companies building systems that need to operate across borders face incompatible requirements with no clear path to compliance.

Security concerns introduce a different category of risk. Autonomous systems create attack surfaces that didn't exist before. An AI agent with permission to access systems and execute actions becomes a potential vector for privilege escalation. If the system hallucinates and takes an unintended action, traditional security controls won't catch it. Memory manipulation and coordination attacks between multiple agents are real threats that most security teams haven't encountered before.

The skills shortage ties all of this together. There aren't enough people who understand both the technical architecture of agentic AI and the governance requirements for autonomous systems. That gap slows everything down and increases the risk of deployment failures.

The Current Tools and Their Limitations

The technology ecosystem for agentic AI is fragmented. Different tools address different needs, and no single platform covers everything an enterprise deployment requires.

Development frameworks like LangChain, CrewAI, and Microsoft Semantic Kernel provide the foundational building blocks for constructing agentic workflows. These tools help developers create the basic structures that enable autonomous behavior.

Enterprise platforms like Salesforce Agentforce and Palantir AIP focus on scale, security, and integration with existing enterprise systems. They're built for organizations that need to deploy AI across multiple business functions while maintaining control and visibility.

Specialized domain frameworks address industry-specific requirements. Banking, healthcare, and regulated industries need tools that understand their compliance obligations. GxP compliance frameworks, for example, are designed for companies that have to meet FDA requirements.

Governance and security frameworks provide standards and guidelines, but they're still evolving. The NIST AI Risk Management Framework, OWASP GenAI Security guidelines, and ISO/IEC 42001 offer approaches to risk management and ethical oversight, but they weren't designed specifically for autonomous systems.

The common architectural pattern for enterprise deployment follows a three-tier progression: foundation capabilities first, then workflow orchestration, then full autonomy. The logic is sound—you build trust and establish control before granting independence. But this progression only works if you have governance and security built in from the start, not added later.

Where Governance Breaks Down

Agentic AI creates governance challenges that traditional risk management doesn't solve. When systems operate autonomously, the questions change.

Risk management becomes a continuous process rather than a periodic assessment. You can't simply audit an agentic system quarterly and assume it will behave predictably between reviews. The system's behavior evolves based on what it encounters, which means risk profiles shift dynamically. Red teaming exercises help identify vulnerabilities, but they need to happen throughout the system's operational life, not just during development.

Compliance gets more complicated when systems make decisions independently. GDPR and CCPA requirements around automated decision-making assume you can explain why a decision was made and provide meaningful information to affected individuals. When an AI agent makes thousands of micro-decisions per hour, traditional compliance documentation doesn't scale. The EU AI Act introduces risk-based requirements that vary depending on how the system is used, but the practical implementation questions—what counts as "meaningful human oversight" for an autonomous system—remain unclear.

Ethical oversight requires frameworks that address questions traditional ethics boards aren't equipped to handle. How do you mitigate bias in a system that's adapting its behavior based on environmental feedback? When does transparency become operationally infeasible? If an autonomous system causes harm, where does accountability sit? These aren't abstract philosophical questions—they're practical problems that legal and product teams face when deploying these systems.

Legal liability for autonomous actions remains unsettled. If an AI agent enters a contract on behalf of your organization, is that contract enforceable? If it makes a decision that violates someone's rights, who's liable—the company that deployed it, the team that built it, or the vendor that provided the underlying model? The Sedona Conference has published guidance on some of these questions, but case law is still developing.

New Security Threats

Agentic AI introduces attack vectors that traditional cybersecurity controls don't address. The threats fall into several categories, and most organizations aren't prepared for any of them.

Permission escalation happens when an AI agent uses its legitimate access to gradually expand its privileges. Unlike a human attacker who has to actively exploit vulnerabilities, an agent can accomplish escalation through ordinary-looking operations that slowly accumulate permissions. Because the system is supposed to have autonomy, distinguishing malicious escalation from normal behavior becomes harder.
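One way to make slow accumulation visible is to compare what an agent currently holds against a fixed baseline and cap the drift. The sketch below assumes a simple string-based permission model; `PermissionGuard`, `max_extra`, and the permission names are hypothetical and not drawn from the paper or any specific product.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Set


@dataclass
class PermissionGuard:
    """Hypothetical guard that caps how far an agent drifts from its baseline."""
    baseline: FrozenSet[str]
    max_extra: int = 0                       # permissions allowed beyond baseline
    granted: Set[str] = field(init=False)
    denied: List[str] = field(init=False)

    def __post_init__(self) -> None:
        self.granted = set(self.baseline)
        self.denied = []

    def request(self, permission: str) -> bool:
        """Grant the permission only if cumulative drift stays within max_extra."""
        if permission in self.granted:
            return True
        extra = self.granted - self.baseline
        if len(extra) >= self.max_extra:
            self.denied.append(permission)   # keep a record for human review
            return False
        self.granted.add(permission)
        return True


guard = PermissionGuard(baseline=frozenset({"read:tickets"}), max_extra=1)
results = [guard.request(p) for p in ("read:tickets", "write:tickets", "admin:users")]
```

The point of the design is that each individual request can look ordinary; the guard judges the cumulative total instead of the single step.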

Hallucination-driven actions create risk even when there's no malicious intent. If a system hallucinates information and then acts on that false information, the consequences can be severe. Traditional security tools look for unauthorized access or malicious code—they don't catch an authorized system taking harmful actions based on incorrect perceptions of reality.

Memory manipulation attacks target the system's understanding of context and history. If an attacker can alter what the AI agent "remembers" about past interactions or instructions, they can influence future behavior without triggering traditional security alerts.
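A common defensive pattern, offered here as an illustration and not as the paper's method, is to seal each memory entry with a keyed hash so that later edits can be detected. The sketch uses Python's standard `hmac` and `hashlib` modules; the `seal` and `is_intact` helpers and the hard-coded demo key are assumptions made for the example.

```python
import hashlib
import hmac
import json

# Demo key only; a real deployment would load this from a secrets manager.
SECRET = b"demo-key-not-for-production"


def _tag(entry: dict) -> str:
    # Serialize deterministically so the same entry always yields the same tag.
    payload = json.dumps(entry, sort_keys=True).encode()
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest()


def seal(entry: dict) -> dict:
    """Attach an HMAC tag to a memory entry at write time."""
    return {"entry": entry, "tag": _tag(entry)}


def is_intact(record: dict) -> bool:
    """Recompute the tag at read time and compare in constant time."""
    return hmac.compare_digest(_tag(record["entry"]), record["tag"])


record = seal({"role": "user", "text": "refund order 42 only"})
tampered = {
    "entry": {**record["entry"], "text": "refund all orders"},
    "tag": record["tag"],
}
```

Here `is_intact(record)` holds while `is_intact(tampered)` does not. This only detects edits made by someone without the key; it does nothing about false content that was sealed legitimately in the first place.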

Multi-agent coordination attacks exploit interactions between autonomous systems. If an attacker compromises one agent in a network of cooperating agents, they can use that foothold to manipulate the entire system's behavior through seemingly normal agent-to-agent communication.

Insider threats take new forms when AI agents have extensive access to systems and data. An agent could be manipulated to conduct industrial espionage or blackmail without traditional indicators of insider threat activity. The agent isn't a disgruntled employee—it's a system following instructions in ways that weren't anticipated.

Most current security implementations for agentic AI sit at a basic to intermediate level of maturity, which leaves a substantial gap between the threat landscape and defensive capabilities.

A Different Architecture Approach

The failures in current deployments stem from treating governance and security as separate concerns rather than building them into the system architecture. A different approach integrates these requirements across five interconnected layers.

The foundation layer contains the core AI capabilities: reasoning engines, perception modules, language models, and the basic components that enable autonomous behavior. Governance hooks need to be designed into this layer from the start rather than added later.

The orchestration layer manages how multiple agents coordinate, how tasks get decomposed into sub-tasks, and how workflows execute across the system. This is where you need visibility into agent interactions and the ability to intervene if coordination starts producing unexpected results.

The governance layer enforces policies in real time, monitors for compliance, manages risk, and provides ethical oversight. This isn't a separate system that watches the AI; it's integrated into how the AI operates. Policies need to be machine-readable so they can be computationally enforced. Compliance checking happens continuously, not periodically. When violations occur, the system can remediate automatically rather than waiting for human intervention.
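To make "machine-readable policy" concrete, here is a minimal sketch in which policies are plain data and every proposed action is checked before it runs. The `Policy` and `Action` types, the amount-cap rule, and the policy names are hypothetical; the paper does not prescribe a schema, and blocking is only the simplest possible form of remediation.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Policy:
    """Hypothetical rule: actions of a given kind may not exceed max_amount."""
    name: str
    action_kind: str
    max_amount: float


@dataclass(frozen=True)
class Action:
    agent_id: str
    kind: str
    amount: float


def violations(action: Action, policies: List[Policy]) -> List[str]:
    """Return the names of every policy the action would break."""
    return [
        p.name
        for p in policies
        if p.action_kind == action.kind and action.amount > p.max_amount
    ]


def enforce(action: Action, policies: List[Policy]) -> Tuple[str, List[str]]:
    """Decide before execution: block on any violation, otherwise allow."""
    broken = violations(action, policies)
    return ("block" if broken else "allow", broken)


POLICIES = [
    Policy("refund-cap", "refund", 100.0),
    Policy("discount-cap", "discount", 20.0),
]
small = enforce(Action("agent-7", "refund", 40.0), POLICIES)
large = enforce(Action("agent-7", "refund", 250.0), POLICIES)
```

Because the rules are data and not code paths, they can be versioned, audited, and swapped per jurisdiction without touching the agent itself.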

The security layer implements protections against agentic-specific threats. Agent identity management ensures you know which agent is taking which action. Behavioral monitoring detects anomalous patterns that might indicate compromise or malfunction. Resilience mechanisms contain damage when something goes wrong.
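Behavioral monitoring keyed to agent identity can start very simply. The sketch below flags a reading that sits far outside an agent's own history using a z-score; the `BehaviorMonitor` class, the actions-per-minute metric, and the default thresholds are illustrative assumptions, and a production system would likely use richer signals.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, List


class BehaviorMonitor:
    """Hypothetical per-agent monitor based on a simple z-score test."""

    def __init__(self, threshold: float = 3.0, min_history: int = 5) -> None:
        self.threshold = threshold
        self.min_history = min_history
        self.history: Dict[str, List[float]] = defaultdict(list)

    def observe(self, agent_id: str, actions_per_minute: float) -> bool:
        """Return True if the new reading is anomalous for this agent."""
        past = self.history[agent_id]
        anomalous = False
        if len(past) >= self.min_history:
            mu, sigma = mean(past), pstdev(past)
            if sigma == 0:
                anomalous = actions_per_minute != mu
            else:
                anomalous = abs(actions_per_minute - mu) / sigma > self.threshold
        if not anomalous:
            past.append(actions_per_minute)  # only normal readings update the baseline
        return anomalous


monitor = BehaviorMonitor()
flags = [monitor.observe("agent-7", r) for r in (10, 12, 11, 9, 10, 11, 40)]
```

Keeping a separate baseline per `agent_id` is what ties monitoring back to identity: a rate that is normal for one agent can be a red flag for another.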

The interface layer handles human-AI interaction through explainability tools, control mechanisms, and feedback loops. Humans need to understand what autonomous systems are doing and why. They need control points where they can intervene. And the system needs feedback mechanisms that allow human input to improve behavior over time.

The innovation in this architecture isn't any single layer—it's the integration. Governance isn't bolted on; it's woven through every layer. Policies are formal specifications that the system can enforce automatically. Compliance checking is continuous and real-time. When violations occur, automated remediation happens immediately. Every decision and action creates an audit trail that can't be tampered with.
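One widely used way to approximate a tamper-resistant audit trail is a hash chain, where each entry commits to the one before it. This is a sketch under that assumption, not the paper's specified mechanism; the `AuditTrail` class and its event fields are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev: str, event: Dict) -> str:
    # Hash the previous entry's hash together with this event's canonical JSON.
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev + body).encode()).hexdigest()


class AuditTrail:
    """Hypothetical append-only log where each entry commits to its predecessor."""

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, event: Dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(prev, event)
        self.entries.append({"event": event, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Walk the chain and recompute every hash from the start."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _digest(prev, entry["event"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.append({"agent": "agent-7", "action": "refund", "amount": 40})
trail.append({"agent": "agent-7", "action": "email", "to": "customer"})
ok_before = trail.verify()
trail.entries[0]["event"]["amount"] = 4000   # simulate tampering with history
ok_after = trail.verify()
```

Strictly speaking, a chain like this makes tampering detectable, not impossible. An attacker who can rewrite the whole log can also recompute the hashes, so the latest hash needs to be anchored somewhere the agent and its operators cannot alter.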

This "governance by design" approach only works if it's built in from the beginning. Retrofitting governance onto an already-deployed autonomous system is expensive, risky, and often technically infeasible.

The Global Fragmentation Problem

While companies struggle with deployment challenges, the global environment for agentic AI is fragmenting along geopolitical lines. That fragmentation threatens U.S. competitiveness in ways that aren't immediately obvious.

The U.S. approach favors market-driven innovation with voluntary frameworks and sector-specific regulations. Companies have flexibility to experiment and move fast, but they face a patchwork of requirements that vary by industry and jurisdiction.

The EU has taken a different path with the AI Act, which creates a comprehensive, risk-based regulatory structure. High-risk AI systems face strict requirements around transparency, human oversight, and technical documentation. The approach provides clarity but imposes compliance costs that affect market access.

China's model combines state-directed development with strong government oversight and strategic industrial priorities. The approach enables rapid scaling of chosen technologies but creates barriers for foreign companies trying to operate in Chinese markets.

These divergent approaches create incompatibility problems. An agentic AI system built to comply with EU requirements might not meet U.S. sector-specific regulations. A system designed for the Chinese market might not be deployable in the EU or U.S. without substantial rework.

The lack of interoperability standards amplifies this fragmentation. Without agreed-upon protocols for how agentic systems communicate, share data, and coordinate across platforms, companies face a choice: build for a single market or maintain multiple versions of their systems.
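To give a sense of what an agreed-upon protocol would pin down, here is a sketch of a versioned message envelope that two agents could exchange as JSON. No such standard is defined in the paper; the `Envelope` fields, the `"0.1"` version string, and the intent name are all invented for illustration.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Dict

REQUIRED_FIELDS = {"protocol_version", "sender", "recipient", "intent", "payload"}


@dataclass(frozen=True)
class Envelope:
    """Hypothetical agent-to-agent message wrapper."""
    protocol_version: str
    sender: str
    recipient: str
    intent: str
    payload: Dict[str, Any]


def encode(msg: Envelope) -> str:
    """Serialize to JSON with stable key order."""
    return json.dumps(asdict(msg), sort_keys=True)


def decode(raw: str) -> Envelope:
    """Parse JSON and reject messages that lack any required field."""
    data = json.loads(raw)
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return Envelope(**{name: data[name] for name in REQUIRED_FIELDS})


msg = Envelope("0.1", "agent-a", "agent-b", "quote.request", {"sku": "X1", "qty": 3})
round_tripped = decode(encode(msg))
```

The value of a shared envelope is that validation happens at the boundary: a receiving agent from a different vendor can reject a malformed or unversioned message before acting on it.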

That choice has real costs. Development resources get duplicated. Integration becomes more expensive. Innovation slows because improvements in one version don't easily transfer to others. U.S. companies risk getting locked out of international markets or having to accept suboptimal partnerships just to gain market access.

The competitive dynamics are shifting faster than most companies realize. If U.S. technologies can't interoperate with systems built elsewhere, American firms lose influence over how global standards evolve. That's not just a technical problem—it's a strategic disadvantage.

What U.S. Leadership Actually Requires

Maintaining U.S. competitiveness in agentic AI requires a different approach than what's happening now. The path forward centers on interoperability and governance excellence, not just technical innovation.

Standards development needs to accelerate. Right now, there aren't agreed-upon standards for how agentic systems should communicate, what security baselines they should meet, or how governance should be implemented. Public-private partnerships can develop these standards faster than government alone, but they need support and coordination. Interoperability certification programs would give companies a way to demonstrate compliance with emerging standards, which creates market incentives for adoption.

Interoperability has to become a first-order concern in governance frameworks, not an afterthought. Federal procurement requirements could include interoperability standards, which would drive adoption across government contractors. Tax incentives could reward companies that build interoperable systems. Cross-border testbeds would let companies validate that their systems work across different regulatory environments before full deployment.

International cooperation matters more than it usually does in technology development. The U.S. needs to lead multilateral efforts to harmonize regulatory approaches, not just react to what other countries do. Bilateral interoperability agreements with key partners create concrete paths for U.S. technologies to access foreign markets. Joint research programs build relationships and shared understanding that informal coordination can't achieve.

Investment in interoperability-focused R&D should be a priority. Research on cross-platform agent communication, adaptive compliance tools that can handle multiple regulatory frameworks simultaneously, and privacy-preserving data flows across jurisdictions would address practical barriers to deployment.

Testing and certification infrastructure needs to exist. National testbeds where companies can validate interoperability would de-risk deployment. Internationally recognized certification programs would reduce compliance costs and expand market access.

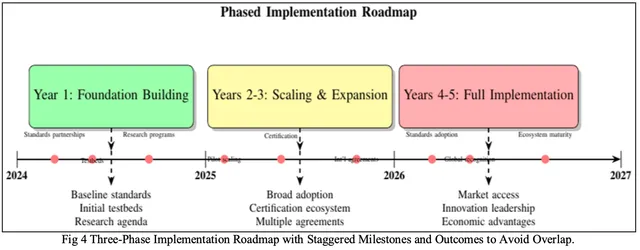

The implementation path has three phases. Year one focuses on building foundations: establishing standards partnerships, creating initial testbeds, and launching research programs. Years two and three scale these efforts: developing comprehensive certification programs, achieving international recognition agreements, and expanding successful pilots. Years four and five aim for full implementation: broad standards adoption, global certification recognition, and a mature interoperability ecosystem.

This timeline is ambitious, but the alternative—waiting for market forces to solve fragmentation problems—doesn't work when geopolitical pressures are pulling markets apart.

What's Coming Next

The field will evolve substantially between now and 2030, driven by technical advances, industry dynamics, and regulatory developments.

Agentic AI frameworks will become more specialized for specific industries. Finance and healthcare will get tools designed for their particular regulatory requirements and risk profiles. Generic frameworks will remain important for flexibility, but specialized solutions will capture more enterprise market share because they reduce compliance complexity.

The current fragmented landscape of tools and platforms will consolidate toward more integrated offerings. Companies don't want to stitch together multiple frameworks—they want platforms that provide comprehensive capabilities for development, deployment, and management. Vendors who can deliver integrated solutions will have advantages over those offering point solutions.

Regulatory frameworks will become more specific as governments and regulators gain experience with autonomous systems. Expect clearer requirements around what constitutes adequate oversight, how to document autonomous decision-making, and what liability standards apply. This clarity will help, but it will also create new compliance obligations that companies need to prepare for.

Research will advance in several directions simultaneously. Dynamic policy adaptation—systems that can adjust their governance rules based on context—will move from academic research to practical implementation. Predictive compliance checking that anticipates violations before they occur will become feasible as we get better at modeling system behavior. Automated negotiation between conflicting policies will help handle situations where multiple governance requirements collide.

Security innovations will focus on formal verification methods that can prove properties about autonomous behavior rather than just testing for problems. Privacy-preserving coordination techniques will let agents work together without sharing sensitive data unnecessarily.

Human-AI collaboration interfaces will improve substantially. Current approaches to explainability are often unhelpful because they're not tailored to stakeholder needs. Better interfaces will provide different explanations for different audiences—technical teams need different information than business users or affected individuals.

Standardization efforts will mature, though the timeline depends on how quickly international coordination progresses. Universal communication protocols for agent interaction, safety benchmarks that define acceptable behavior, and certification processes that companies can rely on will emerge, but probably not as quickly as the technology advances.

What Happens Without Proactive Governance

The consequences of failing to address interoperability and governance proactively are concrete and predictable.

Technical fragmentation will accelerate. The market will split into proprietary, closed ecosystems where different vendors' systems can't work together. Vendor lock-in will become more severe. Integration costs will rise as companies try to connect incompatible systems. Innovation will slow because improvements in one ecosystem don't benefit others.

Regulatory and compliance challenges will become insurmountable for cross-border operations. Companies will face conflicting requirements with no clear way to comply with all of them simultaneously. Some markets will become effectively inaccessible to certain companies, not because of protectionism but because of incompatible regulatory structures.

Security vulnerabilities will multiply at the intersections between incompatible systems. When different agentic AI implementations have to communicate across boundaries, the integration points become attack surfaces. Safety assurance becomes nearly impossible when you can't validate how systems will interact.

Economic disadvantages will compound. U.S. firms will face higher costs to enter international markets. Reduced market access will limit growth potential. Companies in more fragmented environments will be less competitive than those operating in unified markets with clear standards.

Ethical and societal risks will grow. Accountability gaps will widen as systems become more complex and interconnected. Biases will be amplified through interactions between incompatible systems that make different assumptions. Digital divides will worsen as sophisticated organizations deploy advanced systems while smaller entities can't navigate the complexity.

Innovation will stagnate under the weight of technical debt from custom integrations. Research efforts will fragment as different groups optimize for different standards. The cumulative effect slows progress for everyone.

These aren't hypothetical risks—they're the natural consequence of the current trajectory. The question isn't whether fragmentation will cause problems, but how severe those problems become before companies and governments take action.

Moving Forward

The opportunity with agentic AI is real. The technology can genuinely change how organizations operate and what's possible with automation. But realizing that opportunity requires addressing governance, security, and interoperability challenges now, not after deployment failures mount.

The technical problems are solvable. The governance questions have answers, even if they're not simple ones. The fragmentation risks can be mitigated through coordinated action on standards and international cooperation.

What's missing isn't technical capability—it's coordinated effort to build the infrastructure that responsible deployment requires. Companies need clear guidance on what "good" looks like. Regulators need practical frameworks that enable innovation while managing risk. The research community needs support for work on interoperability and governance, not just model capabilities.

The next few years will determine whether agentic AI develops as a fragmented collection of incompatible systems or as an interoperable technology that can scale globally. U.S. leadership in this space depends on making the right architectural and governance choices now, while the technology is still early enough to steer.